Redpanda’s upcoming Iceberg integration makes accessing your streaming data radically more efficient

Organizations using streaming data platforms like Redpanda to optimize their users’ online experiences and make real-time decisions also need offline capabilities to analyze large volumes of historical data while maintaining an up-to-the-minute view of their operations. For this, businesses increasingly deploy data lakehouse architectures on cloud object stores and leverage tools like Apache Spark™, Snowflake, Databricks, ClickHouse, or custom code written in Python or Java.

Consider an e-commerce application that uses Redpanda to track events across a website in real time. Business analysts and data scientists have offline queries like, “What were the most popular products in the last six months grouped by region?” and, “How does traffic to our site change around holidays?” These queries are often written in SQL, and each one processes a large volume of data at once rather than consuming a continuous stream.

In this post, we introduce our upcoming native Apache Iceberg™ integration: Iceberg Topics, which dramatically simplifies how you access your streaming data from common data analytics platforms using Iceberg tables.

The upcoming beta of Redpanda’s Iceberg Topics lets you create Iceberg tables from your topics, with or without a schema, then register them with your table catalog and query them with your favorite Iceberg-compatible tools.

For the unfamiliar, Iceberg is a table format that originated at Netflix, became a top-level Apache project, and is now a standard tool for building the large, open data lakes that underpin a data lakehouse. Data that Redpanda makes available as Iceberg tables can be read by any database or analytics tool that supports Iceberg, without moving or migrating your data.

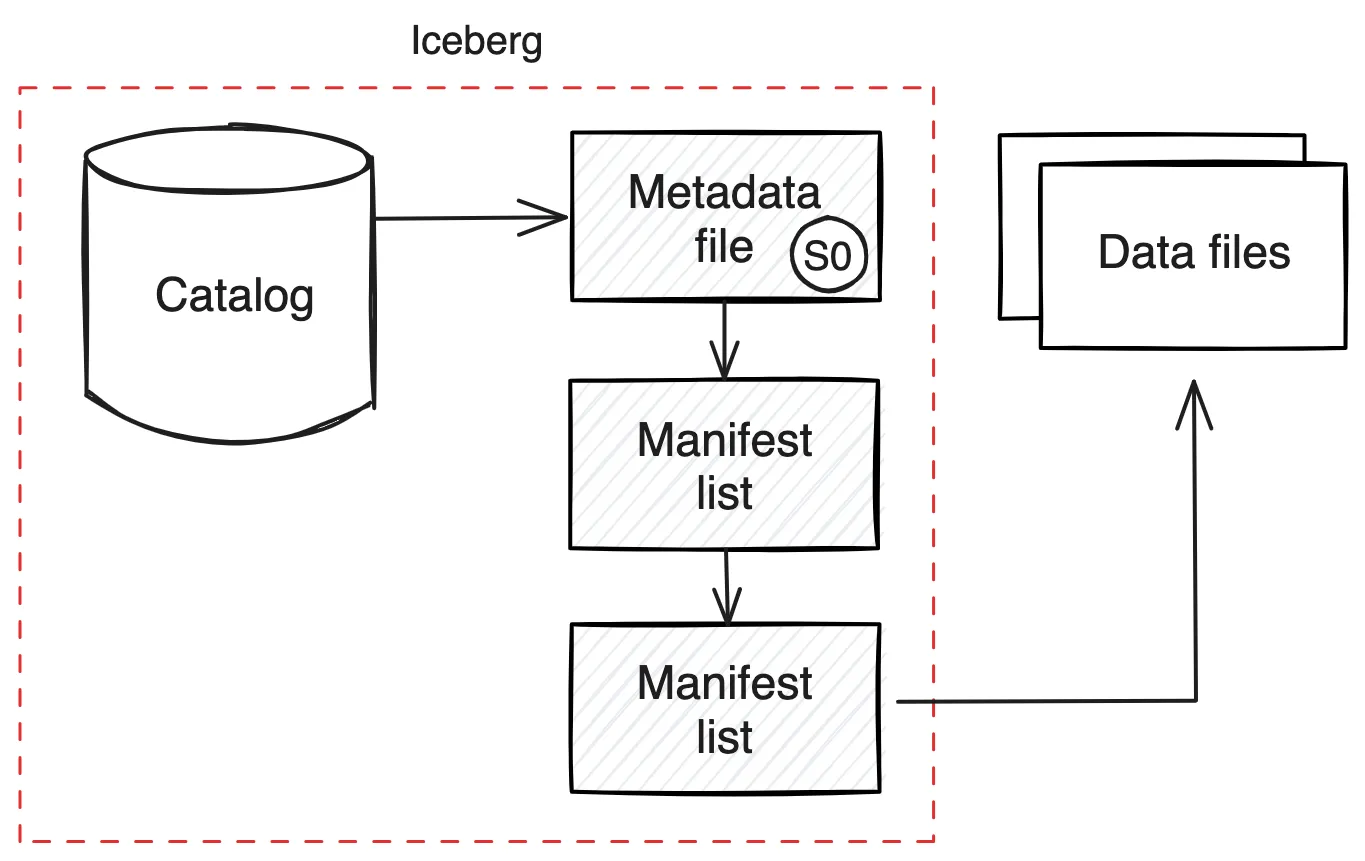

An open table format is a standard way of storing metadata about a collection of data files to provide database-like functionality that’s vendor-neutral and interoperable with different cloud data warehouse platforms and developer tools.

You might say, "I can already store structured data in standard file formats like Parquet, ORC, Avro, and even CSV. Isn't that enough?"

Not quite. To understand table formats and why we need them, it’s helpful to see how we got here. Simply writing your data to a Parquet file on cloud storage may be sufficient for a simple application with a small amount of data, but it quickly becomes unwieldy.

As the data grows, you can no longer rewrite the entire file whenever you want to add new data. That would require reading the entire data set, creating a new Parquet file, and writing it back to cloud storage. For analytics applications that store terabytes or even petabytes of data, that's simply not an option.

One solution is to use directories in cloud storage to represent tables. Each time you have new data to add to the table, you write it to a new file in the directory. Applications that read the data can list and read the files in the directory. This approach avoids rewriting old data whenever you want to write new data and can scale up to massive data volumes. However, it doesn't completely solve the problem.
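As a rough illustration of the "directory as a table" pattern, here is a minimal Python sketch. It uses a local temporary directory and JSON Lines files as stand-ins for cloud storage and Parquet, and the `append_batch` and `read_table` helpers and the `part-*.jsonl` naming are hypothetical choices made for this example.

```python
import json
import tempfile
from pathlib import Path


def append_batch(table_dir: Path, rows: list[dict]) -> Path:
    """Add rows by writing a brand-new file; existing files are never rewritten."""
    next_index = len(list(table_dir.glob("part-*.jsonl")))
    path = table_dir / f"part-{next_index:05d}.jsonl"
    path.write_text("\n".join(json.dumps(row) for row in rows) + "\n")
    return path


def read_table(table_dir: Path) -> list[dict]:
    """Read the 'table' by listing the directory and reading every file in it."""
    rows = []
    for path in sorted(table_dir.glob("part-*.jsonl")):
        rows.extend(json.loads(line) for line in path.read_text().splitlines())
    return rows


with tempfile.TemporaryDirectory() as tmp:
    table_dir = Path(tmp)
    append_batch(table_dir, [{"product": "mug", "region": "FL"}])
    append_batch(table_dir, [{"product": "shirt", "region": "NY"}])
    all_rows = read_table(table_dir)
    file_count = len(list(table_dir.glob("part-*.jsonl")))

print(file_count, all_rows)
```

Note that `read_table` has to open every file, whatever the query is, and nothing records which columns each file contains.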

There are several complications with the "use a directory as a table" approach, but for brevity, we’ll focus on discoverability and accessibility, efficient queries, and schema evolution.

When your table data is just stored in nested directories, mixed with other types of data, it’s hard for analysts to find what they’re looking for. It requires organizational discipline to ensure that all the data is in the right place, as well as programming skills or at least direct knowledge of the data’s precise location to use it.

Table formats like Iceberg let you register tables in a central catalog, where they are readily discoverable using the mechanisms built into your data lakehouse tooling; for example, Snowflake’s SHOW ICEBERG TABLES command. Table metadata can also be accessed via Iceberg’s standard catalog REST API.

Making these tables available within standard cloud data warehouse environments makes them more accessible for analysts. No coding required. The tables blend seamlessly into existing catalogs of the curated data being leveraged by a broad set of users. Analysts can query the data using ordinary SQL, the common language of the data analytics world. This enables all analysts, not just programmers, to efficiently use the data in your data lake.

If your data is split across many files, applications that query it must read every file. This is unavoidable for queries that truly need all the data, but many queries can be satisfied with only some of the data in the table. For instance, querying "all sales from the week of December 1st" or "all visits to the site originating in Florida" only requires a subset of the table. Reading every file in the table would be wasteful.

Table formats like Iceberg can help. They record metadata about every file in the table, including partitioning information, such as the date range for data in the file. The table format also tracks other information that can be used by a query planner, including the minimum and maximum value in each column for every file and the number of null/non-null values in the column. An analytics platform like Snowflake can then use this information to determine which files might be relevant to the query and, importantly, which are not, and it can exclude the irrelevant ones without even reading them.
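To make the pruning idea concrete, here is a minimal Python sketch of how per-file minimum and maximum values let a planner skip files. The `DataFileStats` class, the helper functions, and the file names are hypothetical; this is not Iceberg's metadata layout or API, which tracks considerably richer statistics.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataFileStats:
    """Hypothetical per-file statistics for a single sale_date column."""
    path: str
    min_value: str  # smallest sale_date in the file (ISO dates sort as strings)
    max_value: str  # largest sale_date in the file


def may_contain(stats: DataFileStats, lower: str, upper: str) -> bool:
    """True if the file's [min, max] range overlaps the query range [lower, upper]."""
    return stats.min_value <= upper and stats.max_value >= lower


def prune(files: list[DataFileStats], lower: str, upper: str) -> list[str]:
    """Keep only the files that might hold matching rows; the rest are never opened."""
    return [f.path for f in files if may_contain(f, lower, upper)]


files = [
    DataFileStats("sales-0001.parquet", "2024-11-01", "2024-11-30"),
    DataFileStats("sales-0002.parquet", "2024-11-28", "2024-12-04"),
    DataFileStats("sales-0003.parquet", "2024-12-05", "2024-12-31"),
]

# "All sales from the week of December 1st"
selected = prune(files, "2024-12-01", "2024-12-07")
print(selected)
```

Only the second and third files overlap the requested week, so the first is excluded using metadata alone. The check is conservative: a selected file may still contain no matching rows, but an excluded file cannot contain any.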

As applications evolve, so do their data. The schema of a single data file is typically fixed when written, but that’s rarely true for the table as a whole. New columns are added, obsolete columns are removed, and columns may be renamed to describe their use better. This means the data files making up a table will have different schemas depending on when they were written.

Table formats provide a way to map the schema of each individual data file to the schema for the table as a whole. With table formats, any query will return data matching the current schema of the table rather than the schema that existed when the data was first written. This can include renaming columns, adding default values for columns not present when the file was written, or ignoring columns no longer used in the table. Together, all of these ensure standard SQL semantics for tables comprising collections of diverse data files.
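The mapping can be sketched in a few lines of Python. Iceberg identifies columns by stable field IDs rather than by name, which is what makes renames safe; everything else here (the `TABLE_SCHEMA` dictionary, the `project_row` helper, and the column names and defaults) is a hypothetical simplification and not Iceberg's API.

```python
# Current table schema: field ID -> (current column name, default for files lacking it).
# IDs, names, and defaults are hypothetical.
TABLE_SCHEMA = {
    1: ("order_id", None),
    2: ("customer_region", None),  # renamed from "region"
    4: ("currency", "USD"),        # added after older files were written
}


def project_row(file_schema: dict[int, str], row: dict[str, object]) -> dict[str, object]:
    """Map a row read with an older file schema onto the current table schema."""
    # Index the row's values by stable field ID rather than by column name.
    by_id = {field_id: row[name] for field_id, name in file_schema.items()}
    return {
        name: by_id.get(field_id, default)
        for field_id, (name, default) in TABLE_SCHEMA.items()
    }


# An older data file: "region" had not been renamed yet, "legacy_flag" (ID 3)
# still existed, and "currency" (ID 4) had not been added.
old_file_schema = {1: "order_id", 2: "region", 3: "legacy_flag"}
old_row = {"order_id": 1001, "region": "FL", "legacy_flag": True}

projected = project_row(old_file_schema, old_row)
print(projected)
```

The projected row uses the new column name, fills in the default for the column the file never had, and silently drops the column the table no longer uses.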

In addition to Iceberg’s many benefits of efficiency and schema evolution, it supports data deletion, registering tables with a catalog to make them easier to manage, and advanced index types. You can read more on the Iceberg project home page.

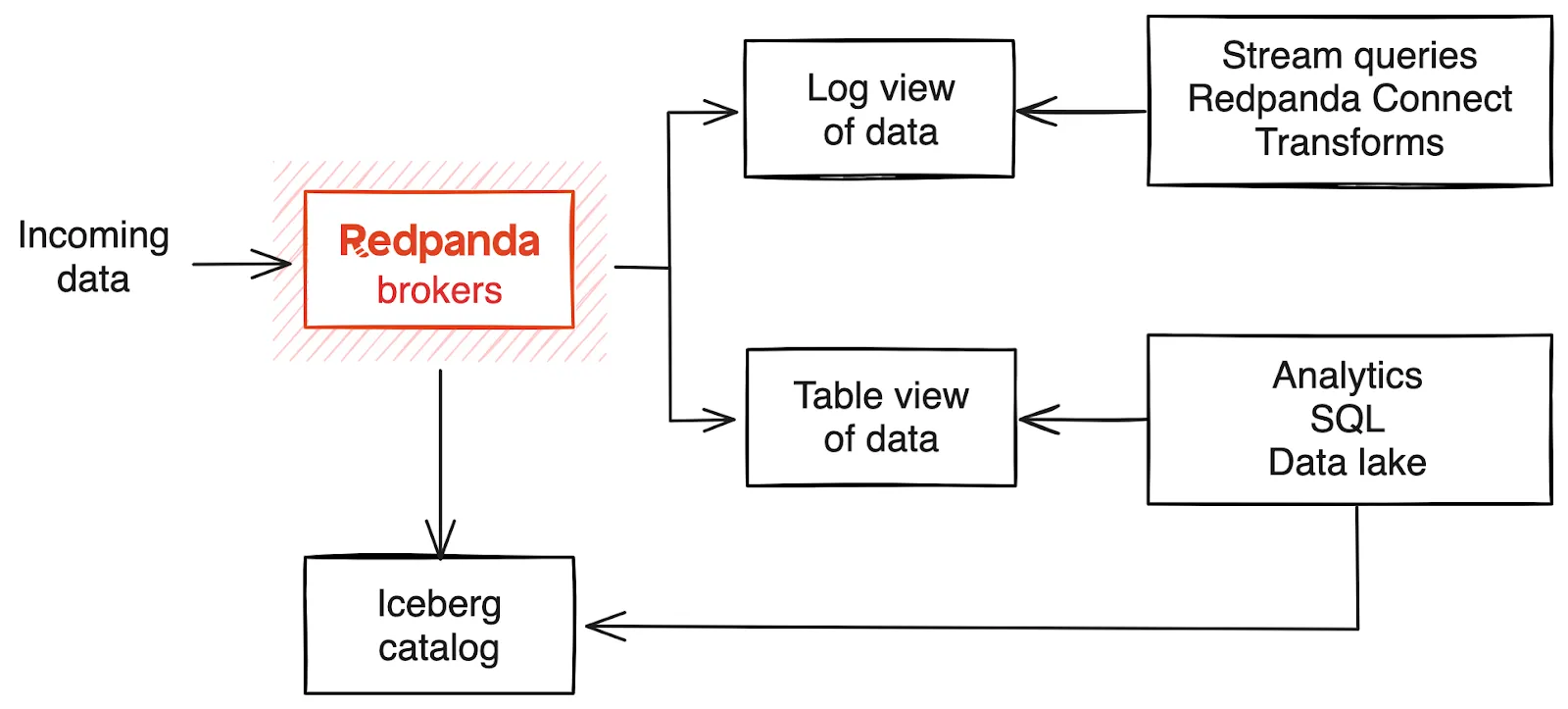

Redpanda gives you highly efficient online streaming data, and Iceberg is today’s standard for offline data analysis in the cloud. Bringing them together gives you the best of both worlds. Your streaming data applications can continue using Redpanda's powerful platform, and your data analytics platforms and tooling can access that same data without migrating it from one system to another.

A typical way to move data between a real-time system like Redpanda and a data lake is through custom data engineering jobs executed in a workflow system like Apache Airflow.

Whenever an analyst needs access to new data, they submit a request to a data engineer to write, test, and deploy a new job, giving the analyst access to that data. These jobs are written in a general-purpose language, meaning that creating new ones can be time-consuming and error-prone. Furthermore, they require reading the data from Redpanda before writing it to its destination, which comes with a performance cost.

Another way to move data from Redpanda to external systems like Iceberg is with Redpanda Connect or Kafka Connect. These flexible systems allow you to move data from just about any source into Redpanda and from Redpanda to just about anywhere else. But this flexibility comes at a cost. These systems require configuration to tell them where to fetch data, where to send it, and how to potentially transform the data in between. Like the custom data engineering jobs described above, they must also read the data from the source location and separately write it to the destination, adding extra “hops” that degrade performance.

Building Iceberg support directly into Redpanda brokers makes storing data from Redpanda in Iceberg much simpler and highly efficient. Rather than configuring a separate component to funnel data to Iceberg, the control over what data lands in your Iceberg data lake is left in the hands of the topic owner. Every Iceberg-enabled topic creates a single Iceberg table. All your data for that topic, no matter what partition it’s written to, appears in a single view. To start writing streaming data to Iceberg, applications can simply set a topic property via any standard Apache Kafka® client, and data begins flowing from the topic into your data lake.

To make this seamless, Redpanda queries the schema registry for your topic to check for a registered schema using the TopicNameStrategy. If it finds one, Redpanda can derive the Iceberg schema from that, with no configuration on your part. It then writes data files using that schema and creates Iceberg metadata.
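For reference, the topic-based subject naming convention (`TopicNameStrategy` in Kafka-compatible schema registries) derives subject names from the topic name alone. The Python helper below is a hypothetical illustration of that convention. It does not call any Redpanda or schema registry API, and the post does not state whether the key subject, the value subject, or both are consulted.

```python
def topic_name_strategy_subjects(topic: str) -> dict[str, str]:
    """Return the key and value subjects that the topic-based naming convention yields."""
    return {"key": f"{topic}-key", "value": f"{topic}-value"}


subjects = topic_name_strategy_subjects("test_topic")
print(subjects["key"], subjects["value"])
```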

There are many advantages to structured data with a known schema. However, Kafka messages often contain simple types that may be readily consumed in SQL queries as is or data formatted as nested JSON that can also be parsed by data lakehouse platforms in SQL queries. In these cases, a strict schema may not be required.

Redpanda’s Iceberg integration also supports these use cases: if you haven’t configured a schema for the topic, Redpanda uses a simple schema consisting of the record’s key, value, and the original message timestamp.
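As a rough mental model of that fallback, the Python sketch below reduces a consumed record to just those three fields. The `SchemalessRow` type and `to_row` helper are hypothetical, the column types are assumptions, and the sample record simply mirrors the JSON shape that `rpk topic consume` prints.

```python
import json
from typing import NamedTuple


class SchemalessRow(NamedTuple):
    """Hypothetical row shape for a topic with no registered schema."""
    key: str
    value: str
    timestamp: int  # original message timestamp, in milliseconds since the epoch


def to_row(record_json: str) -> SchemalessRow:
    """Keep only the key, value, and timestamp of a consumed record."""
    record = json.loads(record_json)
    return SchemalessRow(record["key"], record["value"], record["timestamp"])


record_json = (
    '{"topic": "test_topic", "key": "35", "value": "317.7", '
    '"timestamp": 1725544726868, "partition": 0, "offset": 35}'
)
row = to_row(record_json)
print(row)
```

Because the value arrives as an opaque string in this model, any typing (for example, treating `"317.7"` as a number) is left to a cast at query time.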

NOTE: This is an upcoming feature that is in beta. The exact configuration is subject to change. Check the Redpanda Docs for the latest updates.

To illustrate our Iceberg integration, here’s an example where we create two new topics and enable the Iceberg integration for them. We then write data to the topics and read it back using a SQL JOIN in ClickHouse.

    # Create two topics, one to store 'measurements' and one for dates
    $ rpk topic create test_topic --partitions 1 --replicas 1
    TOPIC       STATUS
    test_topic  OK

    $ rpk topic create test_topic_dates --partitions 1 --replicas 1
    TOPIC             STATUS
    test_topic_dates  OK

    # Enable data lake support in the cluster
    $ rpk cluster config set enable_datalake true
    Successfully updated configuration. New configuration version is 3.

    # Enable data lake support for test_topic
    $ rpk topic alter-config test_topic --set redpanda.iceberg.topic=true
    TOPIC       STATUS
    test_topic  OK

    # Enable data lake support for test_topic_dates
    $ rpk topic alter-config test_topic_dates --set redpanda.iceberg.topic=true
    TOPIC             STATUS
    test_topic_dates  OK

After producing some data to both topics, we can consume it using the standard Kafka API. Here’s an example using rpk, Redpanda’s dev-friendly CLI tool.

    $ rpk topic consume test_topic -o 35:36
    {
      "topic": "test_topic",
      "key": "35",
      "value": "317.7",
      "timestamp": 1725544726868,
      "partition": 0,
      "offset": 35
    }

    $ rpk topic consume test_topic_dates -o 35:36
    {
      "topic": "test_topic_dates",
      "key": "35",
      "value": "1961-2",
      "timestamp": 1725544726869,
      "partition": 0,
      "offset": 35
    }

As both topics are Iceberg-enabled, we can access their data using any tool with Iceberg support. ClickHouse is a SQL data warehouse that can read and query Iceberg tables. Accessing these topics via Iceberg lets us use SQL to query their data.

In the first example, we use a WHERE clause to select only a few rows from one topic. Since there’s no schema registered for these topics, they use the “schemaless” Iceberg support that includes a structured key, value, and the message’s timestamp.

    -- Full path, username and password redacted
    SELECT *
    FROM iceberg('.../test_topic/', '...', '...')
    WHERE (CAST(Key, 'INT') >= 35)
      AND (CAST(Key, 'INT') <= 40)

    Query id: 4d598fb9-7ba1-4846-bf00-1ae85b08b43c

       ┌─Key─┬─Value──┬─────Timestamp─┐
    1. │ 35  │ 317.7  │ 1725544726868 │
    2. │ 36  │ 318.54 │ 1725544728870 │
    3. │ 37  │ 319.48 │ 1725544730872 │
    4. │ 38  │ 320.58 │ 1725544732874 │
    5. │ 39  │ 319.77 │ 1725544734876 │
    6. │ 40  │ 318.56 │ 1725544736877 │
       └─────┴────────┴───────────────┘

    6 rows in set. Elapsed: 0.029 sec.

In the next query, we use a SQL JOIN to combine the data from the two topics based on their keys.

    SELECT *
    FROM iceberg('.../test_topic/', '...', '...') AS values
    INNER JOIN iceberg('.../test_topic_dates/', '...', '...') AS dates
      ON values.Key = dates.Key
    WHERE (CAST(Key, 'INT') >= 35)
      AND (CAST(Key, 'INT') <= 40)

    Query id: 2ea97127-5340-4770-8133-2622eb90353c

       ┌─Key─┬─Value──┬─────Timestamp─┬─dates.Key─┬─dates.Value─┬─dates.Timestamp─┐
    1. │ 35  │ 317.7  │ 1725544726868 │ 35        │ 1961-2      │ 1725544726869   │
    2. │ 36  │ 318.54 │ 1725544728870 │ 36        │ 1961-3      │ 1725544728871   │
    3. │ 37  │ 319.48 │ 1725544730872 │ 37        │ 1961-4      │ 1725544730873   │
    4. │ 38  │ 320.58 │ 1725544732874 │ 38        │ 1961-5      │ 1725544732875   │
    5. │ 39  │ 319.77 │ 1725544734876 │ 39        │ 1961-6      │ 1725544734877   │
    6. │ 40  │ 318.56 │ 1725544736877 │ 40        │ 1961-7      │ 1725544736878   │
       └─────┴────────┴───────────────┴───────────┴─────────────┴─────────────────┘

    6 rows in set. Elapsed: 0.014 sec.

This example demonstrated how you can write data to a Redpanda topic and have it automatically available in your Iceberg table, ready to be consumed on ClickHouse, Snowflake, Databricks, and other Iceberg-compatible platforms.

With its Iceberg integration, Redpanda will support both a streaming data model for real-time applications and efficient analytical queries using industry-standard tools via Iceberg. The public beta will be available this fall, so sign up for early access!
