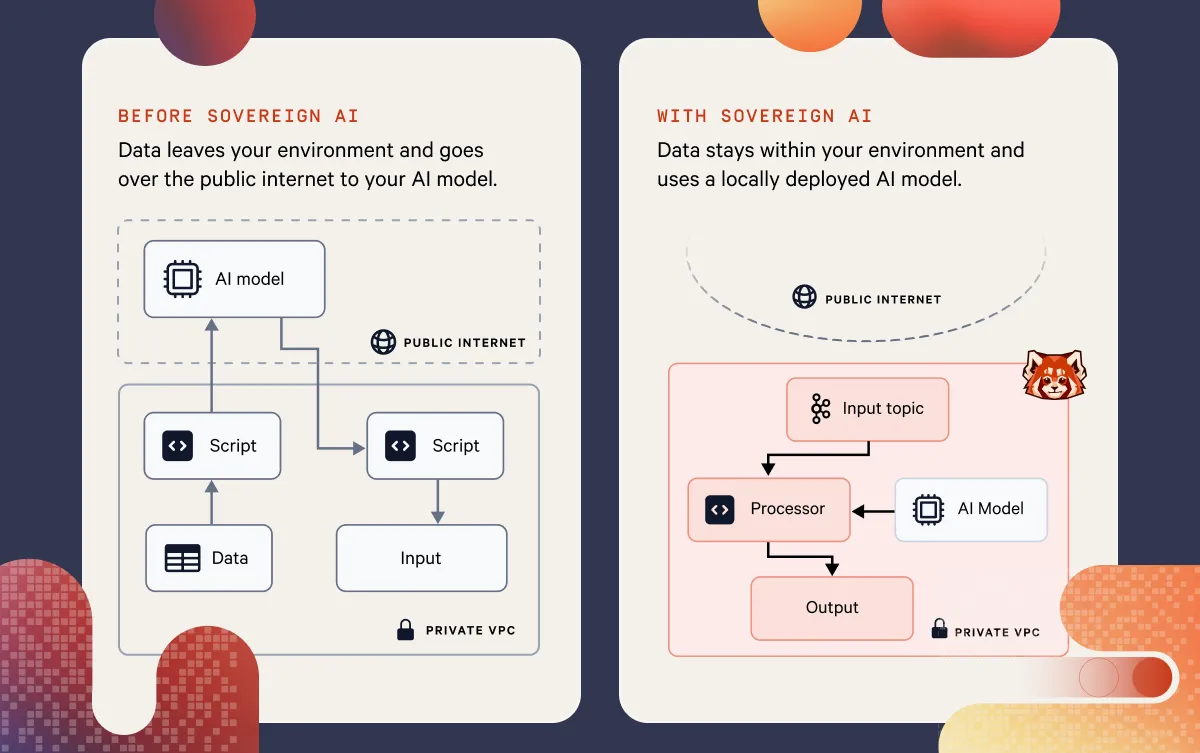

How can the enterprise adopt GenAI without losing data privacy, security, or sovereignty?

The race for AI supremacy is a competition not just between big tech companies but also between sovereign nations. The World Economic Forum defines sovereign AI as “the ability for a nation to build AI with homegrown talent…based on its local policy or national AI strategy.” From a macroeconomic, political, and security standpoint, it is clear why countries need to build advanced AI capabilities within the confines of their borders.

At the micro level, the definition of sovereign AI is analogous. Take the definition above, swap the word “nation” for “organization,” and sovereign AI is confined to the borders of an individual company. Sovereign AI then becomes a company’s competitive advantage: its ability to fine-tune models and instill safety and trust in AI applications.

But how do you ensure data privacy when the easiest way to consume GenAI is using a closed model over a cloud API?

Consider how a software developer might build a simple Q&A chatbot today. They would probably start by downloading a large language model (LLM) framework like LangChain and following one of its tutorials. Before long, your company's sensitive data is being sent to OpenAI to compute text embeddings and generate chat responses, and it is being stored in a vector database that is also hosted in the cloud.

Agents and agentic AI systems arguably make data privacy even harder. They have the autonomy to make decisions and take actions without being prompted by a human. So, without proper governance, it would be difficult to track what sensitive data is being shared outside of your organization.

To be clear, this is not necessarily a problem. It depends on how permissive your company’s data privacy policy is. Companies like OpenAI also take data privacy very seriously and comply with internationally recognized standards and regulations like SOC 2 and GDPR to keep your data safe within their systems.

This might be enough to satisfy your infosec team, but what are your options if your sensitive data must strictly stay within the confines of your network?

Mark Zuckerberg says, “open-source AI is the path forward.” In his article, he advocates using Meta’s Llama models, which Meta describes as open source, as the best option for harnessing the power of GenAI. His argument is that open-source software, by its transparent nature, is more secure and trustworthy than closed-source alternatives.

The salient point here is that models like Llama can be run anywhere. That is good for enterprises that don’t want to give their proprietary data away, because you can bring the model to your data, not the other way around. This stays true only as long as no break-out model emerges that is 10x better than the rest and unlocks use cases that were not possible before.

Meta’s stated commitment to open source makes Llama a top choice today, but other so-called open-source models deserve an honorable mention, such as Google’s Gemma and the Mistral models. However, open models aren’t the only way to solve the data privacy problem with GenAI.

Companies like Cohere support private deployments and “bring your own cloud” (BYOC) deployments that allow you to deploy their LLMs into your cloud account and virtual private cloud (VPC). If your sensitive data is already secured in the cloud, then BYOC is another way to bring the model and other data platform tooling to where your data already resides.

Llama and similar models might be free to download and use, but running a frontier LLM on your own infrastructure is certainly not free. I won’t work out the costs of processing tokens in this article, but suffice it to say, Nvidia H100 GPUs aren’t cheap, and you’ll need more than one H100 to run Llama’s 405B parameter model at a useful scale.
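To see why one H100 is not enough, here is a rough back-of-the-envelope sketch. It only counts the memory needed to hold the model weights and assumes 80 GB of memory per H100; the function name `min_gpus_for_weights` and the precision table are illustrative, not from any library. Real deployments also need room for the KV cache, activations, and batching headroom, so treat the result as a lower bound rather than a sizing guide.

```python
import math

# Assumption: an H100 with 80 GB of memory (other variants exist).
H100_MEMORY_GB = 80

def min_gpus_for_weights(params_billions: float,
                         bytes_per_param: float,
                         gpu_memory_gb: float = H100_MEMORY_GB) -> int:
    """Lower bound on GPUs needed just to hold the model weights.

    Ignores KV cache, activations, and framework overhead.
    """
    # 1 billion parameters at 1 byte each is roughly 1 GB.
    weights_gb = params_billions * bytes_per_param
    return math.ceil(weights_gb / gpu_memory_gb)

# Illustrative precisions: bytes used to store each parameter.
precisions = {"16-bit": 2.0, "8-bit": 1.0, "4-bit": 0.5}

for name, bytes_per_param in precisions.items():
    gpus = min_gpus_for_weights(405, bytes_per_param)
    print(f"405B at {name}: at least {gpus} x 80 GB GPUs")
```

Under these assumptions, the 405B model at 16-bit precision needs about 810 GB for weights alone, which is at least 11 GPUs. Quantizing to 8-bit or 4-bit lowers the floor to 6 or 3 GPUs, which is still more than one.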

So, is this the price you must pay for data privacy in the GenAI era?

There’s always a balance, and the counterargument is that not every model needs to run inference on GPUs to perform well. Llama is actually a collection of models, a herd of llamas if you like, that are pre-trained and fine-tuned in various sizes. The smallest is a 1B parameter model lightweight enough to run on a mobile device for things like text summarization. People save a lot of sensitive data on their mobile phones, so being able to perform inference directly on a device is important from a privacy standpoint.

The type and size of the model you use depend on the use case, but just know that there are options for building GenAI applications that don’t always require super-expensive hardware for inference.

Of course, data privacy is not dead in the age of AI. The solution to the data privacy problem has always been the same. If you have sensitive data and your data privacy policy mandates that data cannot be shared outside of your organization, then the software that comes into contact with that data has to run within your network, GenAI or otherwise.
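As a minimal sketch of that rule, an application could refuse to send sensitive data to any model endpoint that is not inside the network. The check below is hypothetical: the function name `is_internal_endpoint` and the DNS suffixes are placeholders for your own naming scheme. It inspects only the URL's host and does not resolve DNS, so it complements network-level egress controls rather than replacing them.

```python
import ipaddress
from urllib.parse import urlparse

# Hypothetical internal DNS suffixes; replace with your organization's own.
INTERNAL_SUFFIXES = (".internal.example.com", ".svc.cluster.local")

def is_internal_endpoint(url: str, internal_suffixes=INTERNAL_SUFFIXES) -> bool:
    """Return True if the URL's host looks like it is inside the network."""
    host = urlparse(url).hostname
    if host is None:
        return False  # Not a usable URL, so do not trust it.
    try:
        # Literal IP addresses: accept only private or loopback ranges.
        ip = ipaddress.ip_address(host)
        return ip.is_private or ip.is_loopback
    except ValueError:
        pass  # Not an IP literal; fall through to hostname checks.
    host = host.lower()
    return host == "localhost" or host.endswith(tuple(internal_suffixes))

# A self-hosted model inside the network passes; a public cloud API does not.
for endpoint in ("http://10.0.0.5:8000/v1", "https://api.openai.com/v1"):
    verdict = "allowed" if is_internal_endpoint(endpoint) else "blocked"
    print(f"{endpoint}: {verdict}")
```

A guard like this is cheap to add in front of any LLM client, but it only catches misconfiguration in your own code. Enforcing the policy for agents and third-party tools still requires controls at the network boundary.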

What changes is the economics. If you cannot share data with a closed model over a cloud API or simply do not trust the closed model provider, then you have to figure out a way to provide access to an LLM within your network. There are closed model providers like Cohere that support private deployments in the cloud or on-premises, and Meta puts forward a compelling argument for so-called open-source models like Llama.

Data security, data privacy, and data sovereignty should not prohibit the adoption of GenAI technology in the enterprise. Open-source models are constantly improving, and as smaller models become more powerful and the ecosystem of tools around them grows, applications built on GenAI technology can be your organization's most powerful asset.

To learn more about how to keep your enterprise data private, check out Redpanda’s Sovereign AI.
