Want to containerize your streaming data applications? Here’s when to use Docker vs Kubernetes.

Real-time data streaming is integral to the standard business operations of many companies. Developers and engineers still need to know, "what's the best way to deploy my application and its dependencies?"

Streaming solutions like Apache Kafka® and Redpanda perform best on an infrastructure that scales easily and offers low latency and fast processing. These goals fit well with the benefits containers provide. Containers make it easier to deploy software quickly, reliably, securely, and dynamically on virtual machines or physical servers, with minimal overhead and configuration requirements…but there's a catch.

Not all container environments are created equal.

The two gorillas in containerization are Docker and Kubernetes, and many developers want to know which one to use.

First, let's review what a container is, and then we'll go over the difference between Docker and Kubernetes.

A container is an object that encapsulates an application, its runtime, and its dependencies, all packaged into an immutable image. In plain terms, for a Node.js application, the container includes Node.js, your application, and all the Node modules that your application needs to run.

The image is pushed to a central repository and pulled into any environment with a container runtime engine. When someone deploys a container from the image, the engine makes a copy of the image and boots it, connecting it to resources for storage, networking, and CPU.

You can think of it like a shipping container, if it were possible to clone a shipping container, plug it into the wall, and have it do something. As long as you have a place to plug it in, any clone you make will work the same way. That's the real benefit of containers: they guarantee that a developer's application will run the same way everywhere.

Deployed containers are ephemeral: changes made inside a running container are not written back to the image it was started from. When an operator or orchestrator destroys the container, those local changes are lost.
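To make the image and container relationship concrete, here is a toy model in Python. It is only a sketch of the idea and not how any real container engine is implemented; the `Image`, `Container`, and `deploy` names and the `orders:1.0` image are hypothetical.

```python
from dataclasses import dataclass, field
from types import MappingProxyType


@dataclass(frozen=True)
class Image:
    """An immutable snapshot of an application and its dependencies."""
    name: str
    files: MappingProxyType  # read-only mapping of path -> content


@dataclass
class Container:
    """A running instance: the image's files plus a private writable layer."""
    image: Image
    writable_layer: dict = field(default_factory=dict)

    def write(self, path: str, content: str) -> None:
        # Writes land in the container's own layer, never in the image.
        self.writable_layer[path] = content

    def read(self, path: str) -> str:
        # The writable layer shadows the image's files.
        if path in self.writable_layer:
            return self.writable_layer[path]
        return self.image.files[path]


def deploy(image: Image) -> Container:
    """Start a fresh container from an image."""
    return Container(image=image)


# Hypothetical image used only for illustration.
image = Image("orders:1.0", MappingProxyType({"/app/config": "debug=false"}))

first = deploy(image)
first.write("/app/config", "debug=true")
print(first.read("/app/config"))   # debug=true

# Destroying the container discards its writable layer.
del first

second = deploy(image)
print(second.read("/app/config"))  # debug=false
```

The second container starts from the unchanged image, which is why the edit made in the first container is gone.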

Containers work differently from virtual machines because they share the host operating system's kernel instead of booting their own. The runtime engine isolates the container's processes and network traffic from other processes on the host. Apart from the slight overhead of that isolation, an application running in a container performs almost as well as one running on bare metal or directly on a VM.

Most people think of Docker as a container runtime engine, but it's much more. It's an entire platform for building, sharing, and running containers.

Docker brought container technology to the masses, and it's how most people build containers that are lightweight, fast, and easy to use. Docker is easy to install, and its containers start quickly and don't require much memory or CPU. You can run many Docker containers simultaneously and make the best use of the resources available on a host. Docker also makes application upgrades easy: when a developer has a new version, they build a new image, tag it with the version number, and push it to a repository. Operators pull the new image and launch new containers from it.
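The upgrade flow described above maps onto four standard Docker CLI commands: `docker build`, `docker push`, `docker pull`, and `docker run`. The Python sketch below only assembles and prints those commands rather than running them. The repository `registry.example.com/orders`, the version `1.4.0`, and the container name are hypothetical placeholders.

```python
import shlex


def release_commands(repository: str, version: str, context: str = ".") -> list[list[str]]:
    """Developer side: build an image tagged with the version, then push it."""
    tag = f"{repository}:{version}"
    return [
        ["docker", "build", "-t", tag, context],
        ["docker", "push", tag],
    ]


def rollout_commands(repository: str, version: str, name: str) -> list[list[str]]:
    """Operator side: pull the new image and launch a container from it."""
    tag = f"{repository}:{version}"
    return [
        ["docker", "pull", tag],
        ["docker", "run", "-d", "--name", name, tag],
    ]


# Hypothetical repository, version, and container name.
commands = (
    release_commands("registry.example.com/orders", "1.4.0")
    + rollout_commands("registry.example.com/orders", "1.4.0", "orders-1-4-0")
)
for cmd in commands:
    print(shlex.join(cmd))
```

To execute the commands instead of printing them, each list could be passed to `subprocess.run(cmd, check=True)` on a machine with Docker installed.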

Docker quickly becomes burdensome if you want to deploy an application and its external dependencies (e.g., a database or a streaming data platform like Redpanda).

Docker and its most basic orchestrator, Docker Compose, are designed for singleton applications in small environments. They're great for development but not great for production. Docker does have a production-level orchestrator known as Swarm, but when compared to Kubernetes, Swarm's performance and feature set fall short.

Kubernetes is a complete container orchestration tool that descends from a tool that Google built for its internal operations. Where Docker focuses primarily on creating containers and running them without any oversight, Kubernetes is a platform for automating the deployment, scaling, and management of containerized applications.

Kubernetes works with containers built using Docker (or other tools that follow the same standard). Instead of using the entire Docker platform, it talks to a container runtime such as containerd, the open source runtime that Docker also uses. It can connect thousands of nodes across multiple geographic regions or cloud networks, automating the organization and monitoring of all those containers.

As with all things, Kubernetes also has a caveat: it isn't straightforward to set up and has a steep learning curve.

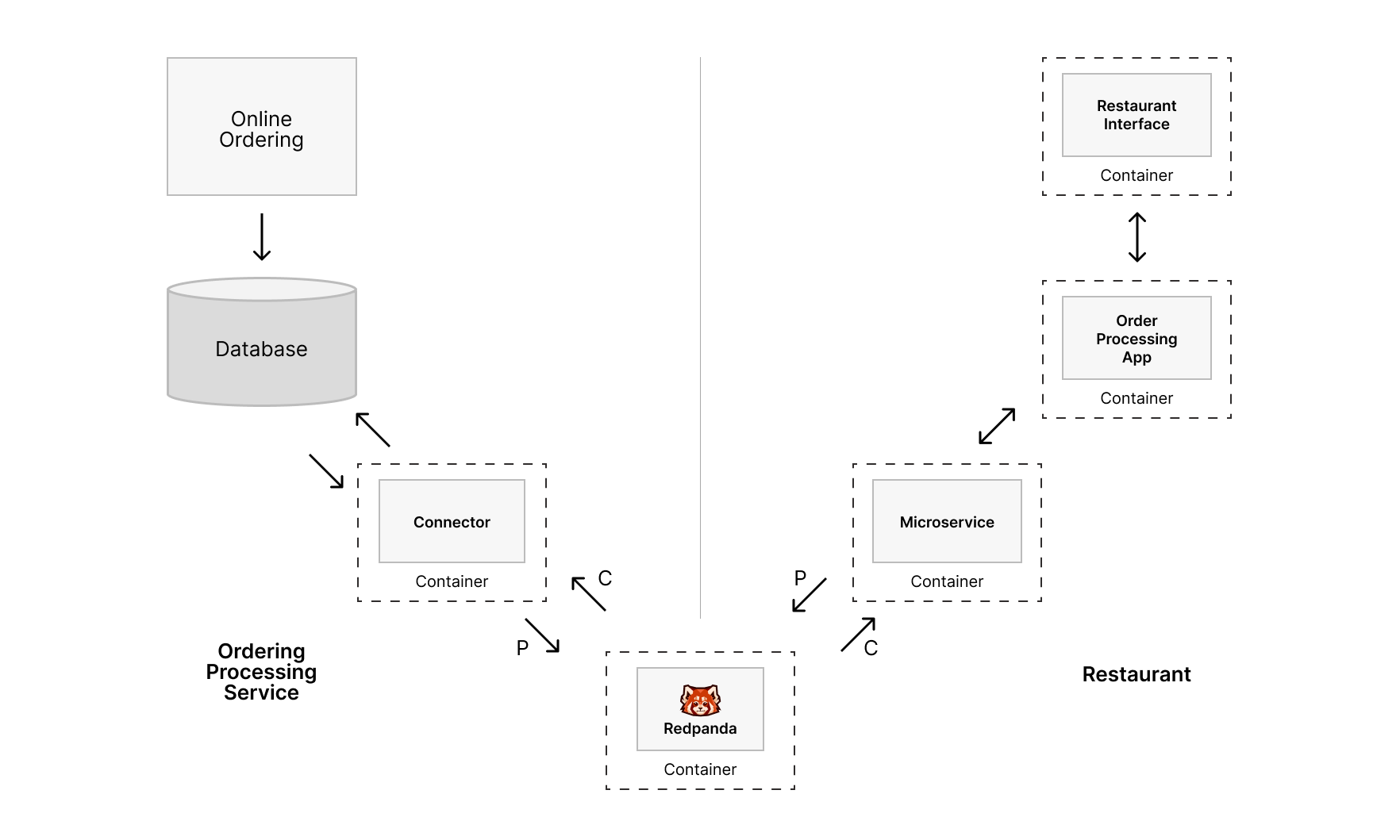

Using containers with streaming data platforms gives you a scalable, cost-effective, and flexible environment. Many organizations today have adopted microservices and distributed architectures, and containerization is a natural fit for those architectural paradigms. Containers provide a consistent runtime environment, regardless of where they're deployed (in the cloud or on-premises). They make it easy for developers, especially remote teams, to access and work with their organization's streaming data platform.

Instead of running all of these components on individual machines or VMs, running them inside containers means that you can upgrade and scale each piece independently. If you're using Docker alone, these changes would be manual and could impact existing users. If you're using Kubernetes, you can instruct it to bring up new containers, move traffic over to them, and shut down the old ones with minimal disruption.
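The "bring up new, move traffic, shut down old" sequence can be pictured with a small simulation. This is a toy model with a hypothetical `rolling_update` function, not Kubernetes' actual controller logic. In a real cluster, the pace of a rolling update is governed by Deployment settings such as `maxSurge` and `maxUnavailable`.

```python
def rolling_update(replicas: int) -> list[tuple[int, int]]:
    """Simulate replacing every old container with a new one, one at a time.

    Returns the (old_count, new_count) pair after each step. A new container
    is always started before an old one is stopped, so the total number of
    running containers never drops below the starting replica count.
    """
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0:
        new += 1                 # start one new container and wait until it is ready
        steps.append((old, new))
        old -= 1                 # traffic has moved over; stop one old container
        steps.append((old, new))
    return steps


for old, new in rolling_update(3):
    print(f"old={old} new={new} total={old + new}")
```

With three replicas the total oscillates between three and four containers and ends with three new ones, which is the reason users see little or no disruption.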

Redpanda (the streaming data platform) is one part of this application and is also deployed inside a container. It functions normally within the container, consuming real-time events from one application and producing them to another. The fact that Redpanda is deployed in a container doesn't impact the flow of real-time data, and it makes it easier for developers to work with the streaming data platform.

Knowing the differences between Docker and Kubernetes, it may seem that, despite its learning curve, Kubernetes is the best containerization choice for deploying a streaming data platform. The answer is an unsatisfying "maybe."

To understand whether Docker or Kubernetes is the best choice for your streaming data platform, you need to consider several factors.

Unless you're running your streaming data platform in a production environment for a sizeable company or processing massive amounts of data each day, Docker will work well for you. It's easier to set up, requires little upfront learning, and delivers huge convenience with minimal overhead.
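The rule of thumb above can be written down as a small helper. This is only one reading of that sentence, not an official sizing guide: the `Workload` fields and the `recommend` function are hypothetical, and what counts as "sizeable" or "massive" is left for you to judge.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    production: bool            # is this a production environment?
    sizeable_company: bool      # is it serving a sizeable company?
    massive_daily_volume: bool  # does it process massive amounts of data each day?


def recommend(workload: Workload) -> str:
    """Return "kubernetes" for large production or high-volume workloads, else "docker"."""
    if (workload.production and workload.sizeable_company) or workload.massive_daily_volume:
        return "kubernetes"
    return "docker"


print(recommend(Workload(production=False, sizeable_company=False, massive_daily_volume=False)))  # docker
print(recommend(Workload(production=True, sizeable_company=True, massive_daily_volume=False)))    # kubernetes
```

Treat the result as a starting point for discussion; factors such as team experience and how much automation you need still matter.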

| | Docker | Kubernetes |
| --- | --- | --- |
| Setup and interaction | Simple | More complicated |
| Create new containers | Yes | No, must be done with additional tools, like Docker |
| Container management | Manual; somewhat able to be automated with additional tools like Docker Swarm, but not good for large scale | Fully automated |

Some engineers live and die by Kubernetes, but not everything needs to be deployed there.

At the same time, Docker isn't the right tool for massive streaming data services.

That answer isn't satisfying, and we know that. The truth is that deciding whether to use Docker or Kubernetes for data streaming comes down to where you'll be deploying the streaming data platform, how you'll be using it, and how important it is that the platform's operation is automated, maintained, and operational 24 hours a day.

Whether you opt for Kubernetes or Docker, use the solution that will accomplish your objectives as simply as possible. Simplicity will save you and your team time, frustration, and troubleshooting, resulting in a smoother containerized data streaming experience overall.

If you've chosen Redpanda as your architecture's event-streaming component, check out our documentation on deploying Redpanda in Docker. You can also deploy Redpanda on Kubernetes via cloud, kind, or minikube. If you have specific questions about Docker and Kubernetes containerization, reach out in the Redpanda Community!
