10x more speed through intentional design. C++, DMA, and autotuning, oh my!

Redpanda allocates RAM specifically for its process and uses it for its own hyper-efficient caching, adjusting buffers according to the actual performance of the underlying hardware. It uses Direct Memory Access (DMA) and builds its cache in a way that aligns with the filesystem, so when it flushes data to disk, it's fast and efficient. This approach helps keep latency down.

Redpanda intentionally allocates one thread to each CPU core and pins it to that core. This is done via the shared-nothing paradigm of ScyllaDB’s Seastar framework. Seastar avoids slow, unscalable locking semantics and issues with caching by pinning each thread to its own core. This forces developers to optimize their code and how it operates, resulting in code that executes very quickly.

Redpanda benefits from tuning the operating system kernel for the best performance. Redpanda has been tested on many architectures to determine which values work best on each platform. This knowledge is rolled into the command 'rpk redpanda tune all', which determines the optimal kernel settings for your platform and sets them.

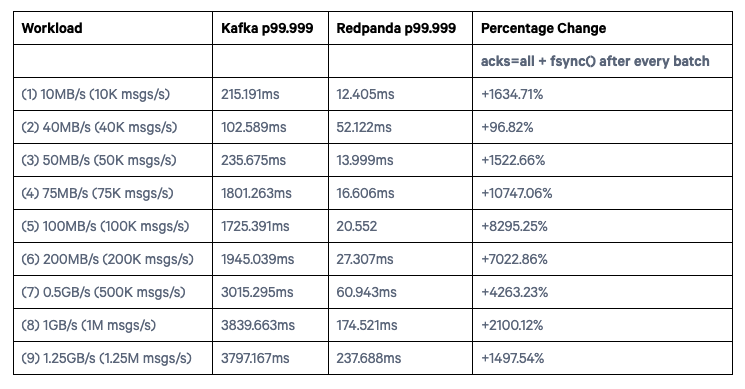

Redpanda outperforms Apache Kafka in every benchmark category. In a series of tests, Redpanda scored between 196% and 10,847% faster than Kafka. For instance, in one of the tests, Kafka's p99999 latency was 1.8 seconds, while Redpanda's was 16 milliseconds.

Redpanda's speed comes from its key architectural differences. It is written in C++ and uses sophisticated performance-enhancing techniques, many of which would be difficult or impossible to use in Java. These include thread-local data structures, pinning memory to threads, and directly invoking Linux-level facilities such as DPDK, io_uring, and O_DIRECT.

In 2021, we published an article on our blog showing that Redpanda outperforms Apache Kafka® in every benchmark category.

That post remains one of the most popular articles on our site, and it's very technical.

Some people just want to know, "How fast is Redpanda?"

TL;DR: It's fast.

For Redpanda, speed is generally measured in comparison to Kafka, and for the purposes of this post, we measure it in the tail latency of produce/consume operations.

We measure tail latency in percentiles, where "p9999" is the latency that 99.99% of requests met or beat. In other words, only 0.01% of requests were slower.
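To make the percentile label concrete, here is a minimal Python sketch that computes a nearest-rank percentile over a list of latency samples. The function name `percentile_latency`, the nearest-rank method, and the sample values are assumptions made for illustration; this is not the tooling used in the benchmark.

```python
import math
from decimal import Decimal


def percentile_latency(samples_ms, pct):
    """Nearest-rank percentile: the smallest sample such that at least
    pct percent of all samples are less than or equal to it."""
    if not samples_ms:
        raise ValueError("need at least one sample")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples_ms)
    # Decimal keeps 99.99 exact, so the rank is not thrown off by
    # binary floating-point rounding.
    rank = math.ceil(Decimal(str(pct)) / 100 * len(ordered))  # 1-based
    return ordered[rank - 1]


# Hypothetical data: 9,999 fast requests and one very slow outlier.
samples = [2.0] * 9_999 + [1_800.0]
p9999 = percentile_latency(samples, 99.99)  # 2.0: the outlier is hidden
worst = percentile_latency(samples, 100)    # 1800.0: the outlier itself
```

With this made-up data, p9999 looks healthy even though one request took 1.8 seconds, which is why the choice of percentile matters.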

Imagine a product that guarantees that 99.99% of all requests will fall below a certain threshold. If there are 100 requests, that seems pretty good.

What happens if there are ten million requests? Then 1,000 of them will be on the downside of that threshold.

That still doesn’t seem bad until you realize that the guarantee says nothing about those other thousand requests. They only have to be slower than the threshold, and they can be much, much slower.

What if those 1,000 requests were making trades worth millions of dollars?

Is it still okay?

For Redpanda, p9999 isn't enough, so we measured ourselves against Kafka at p99999.

We chose this measurement because as more systems exchange messages, the probability of a single message being affected by latencies above the 99.99th percentile also increases.
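The arithmetic behind this argument can be sketched in a few lines of Python. The function names are hypothetical, and the second function assumes each operation's latency is independent of the others, which is a simplification.

```python
from fractions import Fraction


def requests_over_threshold(total_requests, pct):
    """Expected number of requests slower than the pct-th percentile."""
    tail = 1 - Fraction(str(pct)) / 100
    return float(total_requests * tail)


def chance_of_hitting_tail(operations, pct):
    """Probability that at least one of `operations` independent
    operations lands above the pct-th percentile."""
    within = float(Fraction(str(pct)) / 100)
    return 1 - within ** operations


# Ten million requests with a p9999 guarantee leave 1,000 in the tail.
slow_at_p9999 = requests_over_threshold(10_000_000, 99.99)   # 1000.0
slow_at_p99999 = requests_over_threshold(10_000_000, 99.999)

# A workflow that touches 100 messages has roughly a 1% chance of
# seeing at least one latency above p9999.
risk_100_ops = chance_of_hitting_tail(100, 99.99)
```

The more messages a single workflow depends on, the larger `chance_of_hitting_tail` becomes, so a tighter percentile is the more meaningful measurement for systems that exchange many messages.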

The businesses that rely on Redpanda to process data need those messages delivered swiftly and reliably.

For each test, we used three massive nodes for the brokers and two slightly smaller nodes for the clients.

This ensured any bottlenecks would be on the server side, where we were taking our measurements.

Each test measured end-to-end latency with acks=all, so every message was committed on all replicas before the client received an acknowledgment.
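To see why acks=all is the most demanding setting to benchmark, consider this deliberately simplified Python model of when a producer sees an acknowledgment. The function name, the latency inputs, and the assumption that followers start replicating once the leader has written are all illustrative; real brokers are more involved.

```python
def ack_latency_ms(leader_write_ms, follower_replication_ms, acks):
    """Simplified model of how long a producer waits for an acknowledgment.

    leader_write_ms: time for the leader to persist the message.
    follower_replication_ms: per-follower time to replicate it afterwards.
    acks: the standard Kafka producer setting: "0", "1", or "all".
    """
    if acks == "0":
        return 0.0  # fire and forget: the client does not wait
    if acks == "1":
        return leader_write_ms  # only the leader has the message
    if acks == "all":
        # The slowest replica decides when the client hears back.
        return leader_write_ms + max(follower_replication_ms, default=0.0)
    raise ValueError(f"unknown acks setting: {acks!r}")


# Hypothetical numbers: one follower is having a slow moment.
with_all = ack_latency_ms(1.0, [2.0, 5.0], "all")
with_leader_only = ack_latency_ms(1.0, [2.0, 5.0], "1")
```

In this model a single slow replica shows up directly in the latency the client observes, so tail latency measured with acks=all reflects the whole cluster rather than just the leader.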

Across nine different tests, Redpanda scored between 196% and 10,847% faster than Kafka.

Those are massive numbers.

To put the larger number into perspective, for one of the tests, Kafka's p99999 latency was 1.8 seconds, and Redpanda's was 16 milliseconds.
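As a quick sanity check on those two figures, the Python below converts them into a speedup. The definition of "percent faster" used here (baseline divided by candidate, minus one) is an assumption, since the post does not spell out its formula.

```python
def times_faster(baseline_ms, candidate_ms):
    """How many times lower the candidate's latency is."""
    return baseline_ms / candidate_ms


def percent_faster(baseline_ms, candidate_ms):
    """Assumed definition: relative latency reduction as a percentage."""
    return (baseline_ms / candidate_ms - 1) * 100


kafka_p99999_ms = 1_800   # 1.8 seconds, as quoted above
redpanda_p99999_ms = 16   # 16 milliseconds, as quoted above

ratio = times_faster(kafka_p99999_ms, redpanda_p99999_ms)      # 112.5
percent = percent_faster(kafka_p99999_ms, redpanda_p99999_ms)  # 11150.0
```

The rounded figures give a result in the same range as the 10,847% upper bound rather than an exact match, which is what you would expect if the published percentage was computed from unrounded measurements.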

Redpanda's blazing speed comes from its key architectural differences.

Kafka is written in Scala, compiled into Java bytecode, and run in the Java Virtual Machine.

Redpanda is written in C++ and makes use of a number of sophisticated performance-enhancing techniques, many of which would be difficult or impossible to use in Java. These include thread-local data structures, pinning memory to threads (with libhwloc), and directly invoking Linux-level facilities such as DPDK (more efficient packet processing), io_uring (efficient asynchronous disk I/O), and O_DIRECT (file I/O that bypasses the kernel page cache). If you’re interested in the specifics of how we use these capabilities, check out this presentation.

C++ makes Redpanda faster out of the gate, and we build on that with how we interact with the operating system.

Modern operating systems use free RAM for caching files that would otherwise have to be read from and written to disk.

This is called the "page cache," and it was created to speed up reads and writes for files located on slow, rotating disk platters.

Solid-state drives (SSDs) improve access times, but not enough to close the gap. Working with RAM is still orders of magnitude faster than working with disks.

Partitions in Kafka and Redpanda behave differently than files.

When Kafka makes a fetch request, the operating system responds by executing functionality that would benefit an application loading a file. It performs locking and other behaviors that introduce latency where we don't want it.

For Kafka clusters that run in Kubernetes, sharing the page cache between multiple applications also means that the amount of data that Kafka can cache is never guaranteed.

Redpanda uses an append-only log with ordered reads, and we know exactly how much data will be read and written in any request.

Instead of using the page cache, we allocate RAM specifically for the Redpanda process. We use it for our own hyper-efficient caching, adjusting buffers according to the actual performance of the underlying hardware.

We use Direct Memory Access (DMA) and build our cache in a way that aligns with the filesystem, so when we flush data to disk, it's fast and efficient.

Furthermore, because our cache is shared by all the open files being read/written by Redpanda, when there are spikes in message volume the most heavily used partitions have immediate access to additional buffer space. This approach helps keep latency down.
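To give a feel for what "aligns with the filesystem" means in practice, here is a small Python sketch of block-aligned buffers and a write that bypasses the page cache with O_DIRECT. It is not Redpanda's code: the helper names and the 4096-byte block size are assumptions, and `write_direct` only works on Linux filesystems that support O_DIRECT.

```python
import mmap
import os

BLOCK_SIZE = 4096  # assumed; real code should query the device or filesystem


def align_up(nbytes, block=BLOCK_SIZE):
    """Round nbytes up to the next multiple of block."""
    return (nbytes + block - 1) // block * block


def aligned_buffer(payload, block=BLOCK_SIZE):
    """Copy payload into a zero-padded buffer whose length is a multiple
    of block. Anonymous mmap memory starts on a page boundary, which
    satisfies the alignment rule when block equals the page size."""
    buf = mmap.mmap(-1, max(align_up(len(payload), block), block))
    buf.write(payload)
    return buf


def write_direct(path, payload, block=BLOCK_SIZE):
    """Write payload with O_DIRECT (Linux only), skipping the page cache.
    Returns the number of bytes written, including the zero padding."""
    buf = aligned_buffer(payload, block)
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_DIRECT, 0o644)
    try:
        return os.write(fd, buf)
    finally:
        os.close(fd)
        buf.close()
```

Direct I/O generally requires the buffer address, the length, and the file offset to line up with the block size, which is why the payload is padded before it is written. An application that manages its own cache can keep its buffers in this shape all the time, so a flush needs no extra copying.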

It's tempting to spin up an application on a server and call it done.

Latency-sensitive applications like Redpanda and Kafka benefit from the additional step of tuning the operating system kernel for the best performance.

Tuning comes with its own challenges: namely, how do you know what parameter to tune or what value to set?

Setting an incorrect value can have no effect, or worse, it can make the application perform poorly for what it's trying to do.

If you change too many variables, you don't know which one caused a change.

We've tested Redpanda on many, many architectures and determined which values work best on each platform.

We rolled all of this into rpk redpanda tune all.

This command determines the optimal kernel settings for your platform and sets them so you don't have to figure anything out.

Can I get a "Boom, done?"

Boom. 💥 Done.

PRO TIP: This command is only suited for production servers, and you probably shouldn't run it on your development machine.

Threading sits at the core of applications that process data.

It enables parallel processing of work instead of blocking new work while serially processing the current task.

Threads are a part of the CPU architecture for the same reason.

A CPU with multiple cores can execute tasks in parallel, appearing faster because it gets more done.

Threads in an application are assigned to a CPU core by the operating system. They can usually move between cores, but Redpanda intentionally allocates one thread to each CPU core and pins it to that core.

It doesn't get to move.
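Pinning is something the operating system exposes directly. As a rough illustration in Python (Redpanda does this in C++ through Seastar, not like this), the hypothetical helper below restricts the caller to a single core using the Linux-only `os.sched_setaffinity` call.

```python
import os


def pin_to_core(core=None):
    """Pin the caller to one CPU core (Linux only) and return the
    resulting affinity set. Defaults to the lowest allowed core."""
    allowed = sorted(os.sched_getaffinity(0))
    if core is None:
        core = allowed[0]
    if core not in allowed:
        raise ValueError(f"core {core} is not in the allowed set {allowed}")
    os.sched_setaffinity(0, {core})  # pid 0 means "the caller"
    return os.sched_getaffinity(0)
```

After `pin_to_core()` returns, the scheduler will no longer migrate the caller to another core, so its working set stays in that core's caches.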

This is done via the shared-nothing paradigm of ScyllaDB’s Seastar framework. Seastar doesn’t use expensive shared memory across threads. Instead, it avoids slow, unscalable locking semantics and issues with caching by pinning each thread to its own core.

Because of Seastar, everything in Redpanda that would use that core runs through that thread.
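The shared-nothing idea can be sketched without any threads at all. In the toy Python model below, each shard owns its own state and every partition is routed to exactly one shard, so no two shards ever need a lock on the same data. The `Shard` and `Router` classes and the CRC32-based routing are assumptions for illustration, not Seastar's or Redpanda's actual design.

```python
import zlib


class Shard:
    """State owned by exactly one core; no other shard touches it."""

    def __init__(self, shard_id):
        self.shard_id = shard_id
        self.log = {}  # partition name -> list of records

    def append(self, partition, record):
        records = self.log.setdefault(partition, [])
        records.append(record)
        return len(records) - 1  # offset of the new record


class Router:
    """Sends every request for a partition to the shard that owns it."""

    def __init__(self, n_shards):
        self.shards = [Shard(i) for i in range(n_shards)]

    def shard_for(self, partition):
        index = zlib.crc32(partition.encode()) % len(self.shards)
        return self.shards[index]

    def append(self, partition, record):
        return self.shard_for(partition).append(partition, record)


router = Router(n_shards=4)
first = router.append("orders-0", b"created")
second = router.append("orders-0", b"paid")
```

Because ownership is decided by routing rather than by locking, adding cores adds capacity without adding contention. In a real thread-per-core system, the call into the owning shard would be a message passed to that core's thread.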

We then specify that no task can block a core for more than 500 microseconds. If one does, we see it during development and testing. We treat it as a bug, and we fix it.
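A budget like this is easy to picture as a timing check around each unit of work. The Python sketch below measures a task after the fact and flags it if it exceeded the 500 microsecond budget described above. The helper name is hypothetical, and this is only an illustration of the idea, not how Seastar detects stalls.

```python
import time

STALL_BUDGET_US = 500  # the threshold quoted above


def run_with_stall_check(task, budget_us=STALL_BUDGET_US):
    """Run task() and report whether it held the thread for longer
    than budget_us. Returns (result, elapsed_us, stalled)."""
    start = time.perf_counter_ns()
    result = task()
    elapsed_us = (time.perf_counter_ns() - start) / 1_000
    return result, elapsed_us, elapsed_us > budget_us


# A task that sleeps for 10 ms blows through the budget.
_, slow_elapsed_us, slow_stalled = run_with_stall_check(
    lambda: time.sleep(0.01)
)
```

When one thread owns a core, anything that overruns its budget delays every other piece of work queued on that core, which is why treating an overrun as a bug keeps tail latency predictable.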

This forces our developers to optimize their code and how it operates.

Or, said differently, it forces us to write code that executes very, very quickly.

For centuries, people could travel from New York to London on a boat. This was the way it was done.

Airplanes changed everything.

With air travel, people could do things that they had never thought of because everything was suddenly faster.

Developers who use Redpanda rely on it to be consistently fast and safe.

When "fast" is the default environment you're working with, your applications are no longer constrained. You can do more, confident that you'll get better performance because Redpanda is a streaming data platform built for today's hardware and tomorrow's applications.

Specifically, some applications our customers told us were not practical with Kafka’s latency characteristics include use cases in algorithmic trading and trade clearing, real-time gaming, SIEM use cases with strict SLAs, oil pipeline jitter monitoring, and various edge-based IoT use cases.

These applications depend in particular on reliable tail latency lower than Kafka can provide, which means that without Redpanda, they would have been implemented in a fully custom solution, or not at all.

The ability to deliver on these use cases with an existing system using the well-known Kafka APIs drives massive value for these customers.

Redpanda. What will you build with it?

Tell us in the Redpanda Community on Slack, follow us on Twitter, and check out our source-available GitHub repo.
