
Many companies run K8s in private environments for security and control. Redpanda’s single binary architecture makes it easy to support them. Here's what you should know.

Running Redpanda in Kubernetes (K8s) is a common deployment method for Redpanda users, both in the cloud and on-premises, and it's supported by both the Redpanda Operator and the Helm chart.

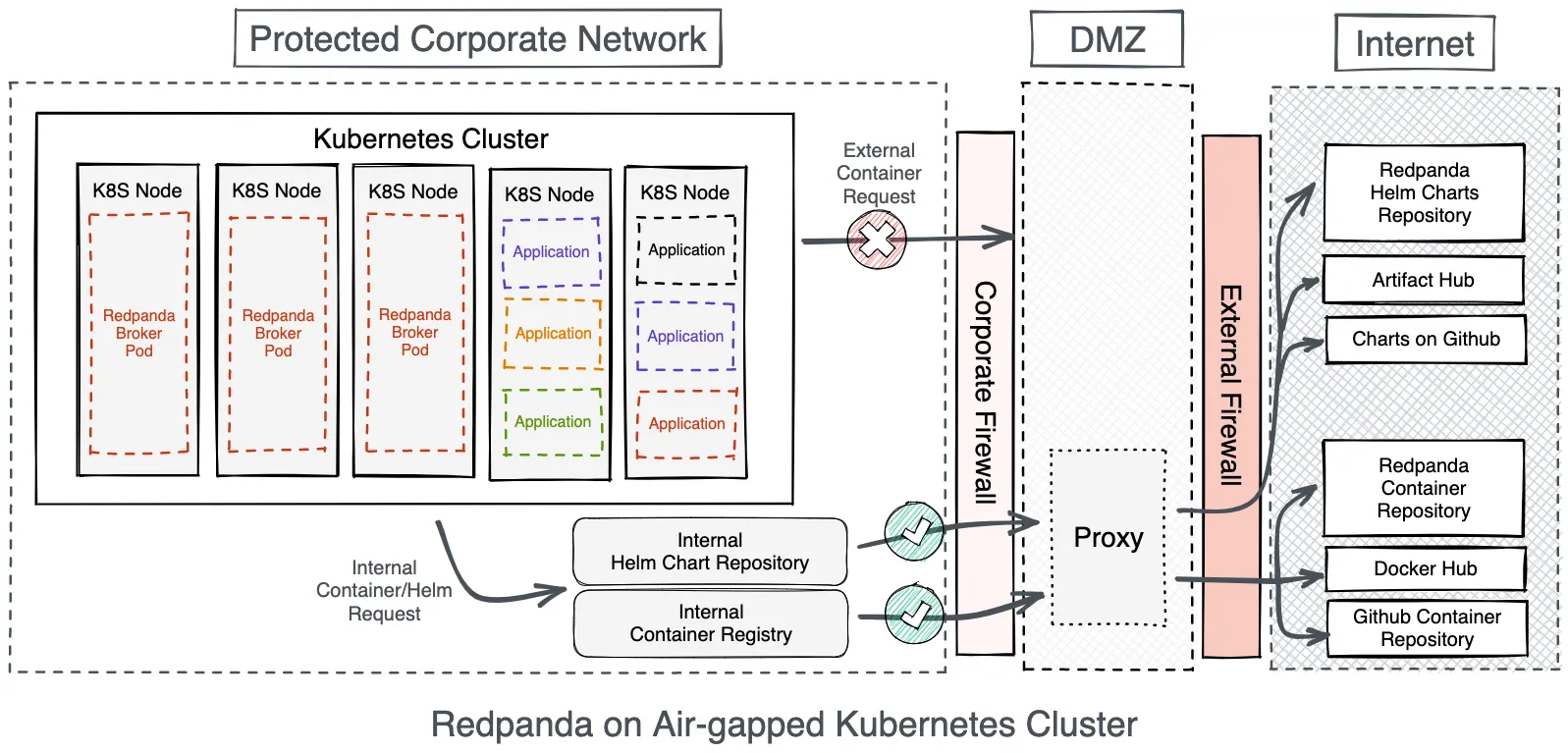

In many enterprises, however, Kubernetes is deployed in a restricted, multi-tenant environment that’s shared across the organization and tightly controlled. These clusters are commonly described as “air-gapped”.

In these deployments, developers and application owners may be restricted in what they can install directly from container registries on the internet. The Kubernetes clusters may be wired to a registry proxy, or only allowed to pull containers from a private registry, so that operations and information security teams can review and approve new applications before they enter the environment.

In this post, we’ll discuss the considerations to keep in mind when implementing Redpanda in a private, air-gapped Kubernetes cluster.

Redpanda is designed to minimize the number of components and subsystems required to get a stable, production-grade cluster running. At a minimum, you’ll need the Redpanda container when running in Kubernetes. If you need a browser-based management user interface, you’ll also want the Redpanda Console container. Additionally, if you’re using any connectors to feed data into or from Redpanda, you may want the Redpanda Connector container.

Redpanda hosts its own container registry. The container image URIs are:

If you need a specific tagged version, you can replace the word latest with the appropriate version string. Alternatively, you can pull these images from Docker Hub:

Note: If you’re going to implement a TLS-enabled Redpanda cluster, you’ll need the Jetstack cert-manager container as well.

To import these images into your air-gapped repository, refer to your repository’s documentation. If your repository acts as a proxy, you can run docker pull (or your container runtime's equivalent, such as crictl pull) through it to seed the images into the proxy’s cache.
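For a private registry that needs images pushed into it, the import usually comes down to a pull, retag, and push sequence. The following is a minimal sketch of that sequence, not a definitive procedure. The helper names `mirror_ref` and `mirror_image`, the registry host `registry.internal.example`, and the sample image reference are all hypothetical placeholders; substitute the image URIs approved for your environment. The script only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch: mirror container images into a private registry.
# ASSUMPTION: every name below (functions, registry hosts, image paths)
# is illustrative and not taken from Redpanda documentation.

# Rewrite "source-host/path:tag" to "target-host/path:tag".
# Assumes the source reference starts with a registry host.
mirror_ref() {
  src_ref=$1
  target_registry=$2
  printf '%s/%s\n' "$target_registry" "${src_ref#*/}"
}

# Print the pull/tag/push sequence for one image (DRY_RUN=1, the default),
# or execute it when DRY_RUN=0.
mirror_image() {
  src_ref=$1
  dst_ref=$(mirror_ref "$1" "$2")
  for cmd in "docker pull $src_ref" \
             "docker tag $src_ref $dst_ref" \
             "docker push $dst_ref"; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "$cmd"
    else
      $cmd
    fi
  done
}
```

Calling `mirror_image registry.example.com/vendor/app:1.2.3 registry.internal.example` prints the three docker commands for review, which suits environments where an operations team has to approve each image before it is pushed.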

The Helm charts for Redpanda and Redpanda Console are hosted in both the Redpanda Helm chart GitHub project and the Redpanda chart repository. The option you choose will depend on your internet access policy.

If you can proxy the Helm chart repository setup and installation process, use the Chart repository. If your environment is fully disconnected, you may need to manually download the latest Helm chart release and install it from the local tarball.

To configure Helm to use a proxy, set the HTTP_PROXY and HTTPS_PROXY environment variables in your shell before running helm repo add and helm install. Depending on your local network configuration, you may also need to set the NO_PROXY environment variable to prevent Helm from going through the proxy to interact with Kubernetes.

When configuring your chart, copy the provided values.yaml to myvalues.yaml and make your localized changes there. Here's a proxy download example:

    $ export HTTP_PROXY=http://proxy:3128/
    $ export HTTPS_PROXY=http://proxy:3128/
    $ export NO_PROXY=localhost,127.0.0.1,0.0.0.0,10.43.0.0/16
    $ helm repo add redpanda https://charts.redpanda.com/
    $ helm repo update
    $ helm install -f myvalues.yaml redpanda redpanda/redpanda

If you can’t use a proxy, see the helm install instructions for adding a locally downloaded chart. Here's a local download example:

    # Run the download on a machine that can reach GitHub, then copy the chart across
    $ export LATEST=$(curl -s https://api.github.com/repos/redpanda-data/helm-charts/releases/latest \
        | jq -r '.assets[].browser_download_url')
    # --untar unpacks the downloaded chart into ./redpanda
    $ helm pull --untar ${LATEST}
    $ ls -ld redpanda
    drwxr-xr-x@ 13 user1 staff 416 Mar 16 18:04 redpanda
    $ helm install -f myvalues.yaml redpanda ./redpanda

When designing your Redpanda cluster for Kubernetes, you’ll need to decide how Kafka clients will connect to the brokers. Within Kubernetes, there are three mechanisms for enabling this: ClusterIP, NodePort, and LoadBalancer.

The option you choose will determine where clients will be able to connect from. Here’s a quick breakdown of when to use what.

If you only need to connect to Redpanda from other containers within the Kubernetes cluster, you can choose ClusterIP. You would connect to the Redpanda broker pods via the internal Kubernetes DNS name for each pod. For example, redpanda-0.redpanda.redpanda-ns.svc.cluster.local is the redpanda-0 broker in the Redpanda cluster, running in the redpanda-ns namespace.
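To make the naming pattern concrete, here is a small sketch that builds a comma-separated broker list from the per-pod DNS names described above. The function name `internal_brokers` is hypothetical, and the port `9093` in the usage example is an assumption; use whatever internal Kafka listener port your values file defines. The `cluster.local` suffix follows the example in the text and would differ if your cluster uses a custom domain.

```shell
#!/bin/sh
# Sketch: build an in-cluster broker list from pod DNS names.
# ASSUMPTION: the function name and the port used in the example call
# are illustrative, not taken from the Redpanda Helm chart.

# internal_brokers STATEFULSET SERVICE NAMESPACE REPLICAS PORT
internal_brokers() {
  name=$1 service=$2 namespace=$3 replicas=$4 port=$5
  list=""
  i=0
  while [ "$i" -lt "$replicas" ]; do
    # Append "<pod>.<service>.<namespace>.svc.cluster.local:<port>",
    # inserting a comma before every entry except the first.
    list="${list:+$list,}${name}-${i}.${service}.${namespace}.svc.cluster.local:${port}"
    i=$((i + 1))
  done
  printf '%s\n' "$list"
}

# Example: three brokers in the redpanda-ns namespace (port is assumed).
internal_brokers redpanda redpanda redpanda-ns 3 9093
```

A client pod inside the cluster could pass the resulting string as its bootstrap server list.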

If you need to access Redpanda from outside of the Kubernetes cluster, you can choose NodePort or LoadBalancer. For most use cases, NodePort is preferred because it provides the lowest latency, fewer network hops to traverse, and a significantly lower chance of dropped TCP packets in the event of Kubernetes resource starvation. It’s also significantly less expensive than LoadBalancer if you're integrating Kubernetes with a third-party load balancing service or device.

NodePort will map the Redpanda container ports to ports on the individual Kubernetes nodes. You would access the brokers via the Kubernetes node hostnames. In some air-gapped environments, the firewall policy could disallow this as Kubernetes administrators may not want containers opening ports directly on the Kubernetes nodes.

If you can’t use NodePort, LoadBalancer is your next best option. When using LoadBalancer, you assign a LoadBalancer service to each Redpanda pod. For example, if you have a 3-node cluster, you’d need three LoadBalancer services. Keep in mind that using LoadBalancer services in this way is not really load balancing: each service always routes traffic directly to the same Redpanda broker. What the services do provide is a unique address for each of your brokers that is reachable from outside the perimeter of Kubernetes.

By default, the Redpanda Helm chart uses NodePort to manage external access. To use LoadBalancer instead, update your Helm chart myvalues.yaml as in this LoadBalancer setup example:

    external:
      type: LoadBalancer
      domain: <external DNS subdomain of the Redpanda cluster>
      addresses:
        - redpanda-lb-0
        - redpanda-lb-1
        - redpanda-lb-2

Note: The addresses should be the DNS short name of each load balancer, and the domain should be the DNS subdomain that external clients will use to access the Redpanda cluster.

Whether or not you allow Redpanda broker connectivity from outside Kubernetes, you’ll want to use Redpanda Console to help manage the Redpanda cluster. Make sure you can connect to Redpanda Console with your browser. This requires a LoadBalancer configuration as well.

Consider running multiple Redpanda Console pods to enable high availability for console access. Additionally, if you're working with the enterprise-licensed version of Redpanda, you can enable authentication directly in Console.

When testing the accessibility of Redpanda within Kubernetes, you’ll need to validate connectivity between containers within Kubernetes, as well as from outside of Kubernetes into the Redpanda pods.

To test connectivity from inside the Kubernetes environment, use the rpk topic produce and rpk topic consume commands from one of the running Redpanda containers in a pod. The easiest way to do this is to kubectl exec into the pod’s container with a shell and run the commands. You’ll need to run this from one of the Kubernetes nodes. Here's an example of testing internal connectivity:

    node$ kubectl get pods -n redpanda
    node$ kubectl exec --stdin --tty -n redpanda redpanda-0 -- /bin/bash
    pod$ rpk topic create my_topic
    pod$ rpk topic describe my_topic
    pod$ echo "test message" | rpk topic produce my_topic
    pod$ rpk topic consume my_topic

To test connectivity from outside the Kubernetes environment, you can download the rpk command to your local system and work with rpk topic produce and rpk topic consume, or point a separate Kafka client to the advertised Redpanda broker addresses.

If you’re using NodePort, this would be the host:port mapping on each Kubernetes node running a Redpanda broker pod. If you’re using the LoadBalancer configuration, this would be the loadbalancer:port mapping for each defined pod. Use kubectl get svc for each LoadBalancer service to determine its accessible IP address or hostname, and the mapped service port for each Redpanda broker pod.
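Once you have collected the address and port of each service, they need to be joined into a single comma-separated broker list. The sketch below shows one way to do that. The function name `join_brokers` is hypothetical, and the input format (one "address port" pair per line, copied by hand from the kubectl get svc output) is an assumption made for illustration.

```shell
#!/bin/sh
# Sketch: turn "address port" lines into a comma-separated broker list.
# ASSUMPTION: the function name and the input format are illustrative;
# the addresses and ports come from your own kubectl get svc output.
join_brokers() {
  # Skip lines with fewer than two fields; join the rest as host:port.
  awk 'NF >= 2 { printf "%s%s:%s", sep, $1, $2; sep = "," } END { print "" }'
}

# Example with placeholder load balancer names.
printf '%s\n' "lbalancer-0 31092" "lbalancer-1 31092" "lbalancer-2 31092" | join_brokers
```

The resulting string is in the form that rpk reads from the REDPANDA_BROKERS environment variable.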

    $ export REDPANDA_BROKERS=lbalancer-0:31092,lbalancer-1:31092,lbalancer-2:31092
    $ rpk topic create my_topic_external
    $ rpk topic describe my_topic_external
    $ echo "test message" | rpk topic produce my_topic_external
    $ rpk topic consume my_topic_external

Some air-gapped Kubernetes installations will restrict the privileges that containers can run with. This helps enforce a least-privilege model for security purposes. Redpanda containers attempt to run with as few privileges as possible to align with that least-privilege model. That said, the Helm chart includes an option to run a tuning pod on each Kubernetes node that runs a Redpanda service pod.

This tuning pod requires the Linux CAP_SYS_RESOURCE capability, which delegates the privilege for setting various kernel tunables.

To get the best performance from Redpanda, make sure that the tuning operations have run. In a bare-metal installation, Redpanda provides a systemd service that tunes various system and kernel parameters to prepare the host. This works because the whole system is generally dedicated entirely to the Redpanda service.

When running in Kubernetes, the Helm chart will run a post-install container that will attempt to tune system-level behaviors on your behalf. Because these tunings are intended to operate on the Kubernetes node’s kernel parameters, additional container privileges are required for the service.

Furthermore, take care when running in a multi-tenant environment where applications may have competing tuning requirements. One notable issue occurs when you allow Redpanda to run the tunings on your behalf. If you don’t pin the Redpanda pods to particular nodes, over time every node in your Kubernetes cluster may end up with the Redpanda tunings applied. These tunings remain in effect for as long as the node stays up, but won’t persist across reboots.

When possible, dedicate Kubernetes nodes to the Redpanda pods and limit what other applications can run there. You can do this by applying a Kubernetes label to each Kubernetes worker node running a Redpanda broker pod. You’ll also need to configure appropriate taints on those nodes and matching tolerations on the Redpanda pods. Check the Kubernetes documentation for assigning pods to specific nodes.
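As a rough sketch of the node side of this setup, the helper below prints the kubectl label and kubectl taint commands for one worker node. The function name `dedicate_node_cmds`, the node name `worker-1`, and the `dedicated=redpanda` key and value are all hypothetical; pick names that fit your cluster's conventions. The Redpanda pods would then need a node selector and a toleration that match the same key and value.

```shell
#!/bin/sh
# Sketch: print the commands that reserve a node for Redpanda pods.
# ASSUMPTION: the function name, node name, and label/taint key and value
# are illustrative placeholders.

# dedicate_node_cmds NODE KEY VALUE
dedicate_node_cmds() {
  node=$1 key=$2 value=$3
  # The label lets pods select this node.
  echo "kubectl label nodes ${node} ${key}=${value}"
  # The NoSchedule taint keeps pods without a matching toleration off the node.
  echo "kubectl taint nodes ${node} ${key}=${value}:NoSchedule"
}

# Example: review the commands for one node before running them.
dedicate_node_cmds worker-1 dedicated redpanda
```

Printing the commands first, instead of running them directly, leaves room for a cluster administrator to review them in a tightly controlled environment.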

Note: If you don’t want Redpanda to tune on your behalf, we recommend applying the tunings at the Kubernetes node level and ensuring these settings persist across reboots. You can prevent the tuning pod from running by setting the following in your Helm chart myvalues.yaml:

    tuning:
      tune_aio_events: false

Redpanda is designed from the ground up to be cloud-native and run on modern high-CPU and NVMe/SSD-backed machines. But Redpanda on Kubernetes isn’t limited to the cloud. Every day, companies choose to run on Kubernetes environments, air-gapped from the internet, to gain more control over security, isolation, and data sovereignty. Redpanda’s single binary architecture makes it easy to support these air-gapped environments.

Interested in using Redpanda in an air-gapped Kubernetes cluster for your next streaming data use case? To ask our Solution Architects and Core Engineers questions and interact with fellow Redpanda users, join the Redpanda Community on Slack.
