Event stream processing—a detailed overview

Event stream processing

Event stream processing (ESP) is a data processing paradigm that can handle continuous event data streams in real time. Unlike traditional batch processing, ESP operates on data as it arrives, making it ideal for scenarios where speed and responsiveness are critical. It revolves around ingesting, processing, and analyzing event data streams, enabling immediate reaction to events as they are generated.

As more data is generated in real time, event stream processing is becoming increasingly important. It offers advantages such as real-time responsiveness and better-informed decision-making.

This guide explores event stream processing in detail, including foundational principles, key implementation strategies, best practices, and emerging trends.

Summary of event stream processing concepts

| Concept | Description |
|---|---|
| Event | An event is a change in application state, such as a completed transaction, a user click, or an IoT sensor reading. |
| Event stream | A sequence of events ordered by time. |
| Event stream processing | The continuous processing of a real-time event stream. |
| Event stream processing patterns | Event stream processing involves different data transformations for analytics like filtering, aggregation, and joins. |
| Essential considerations in event stream processing | Scalability, fault tolerance, state management, and integration |

How does event stream processing work?

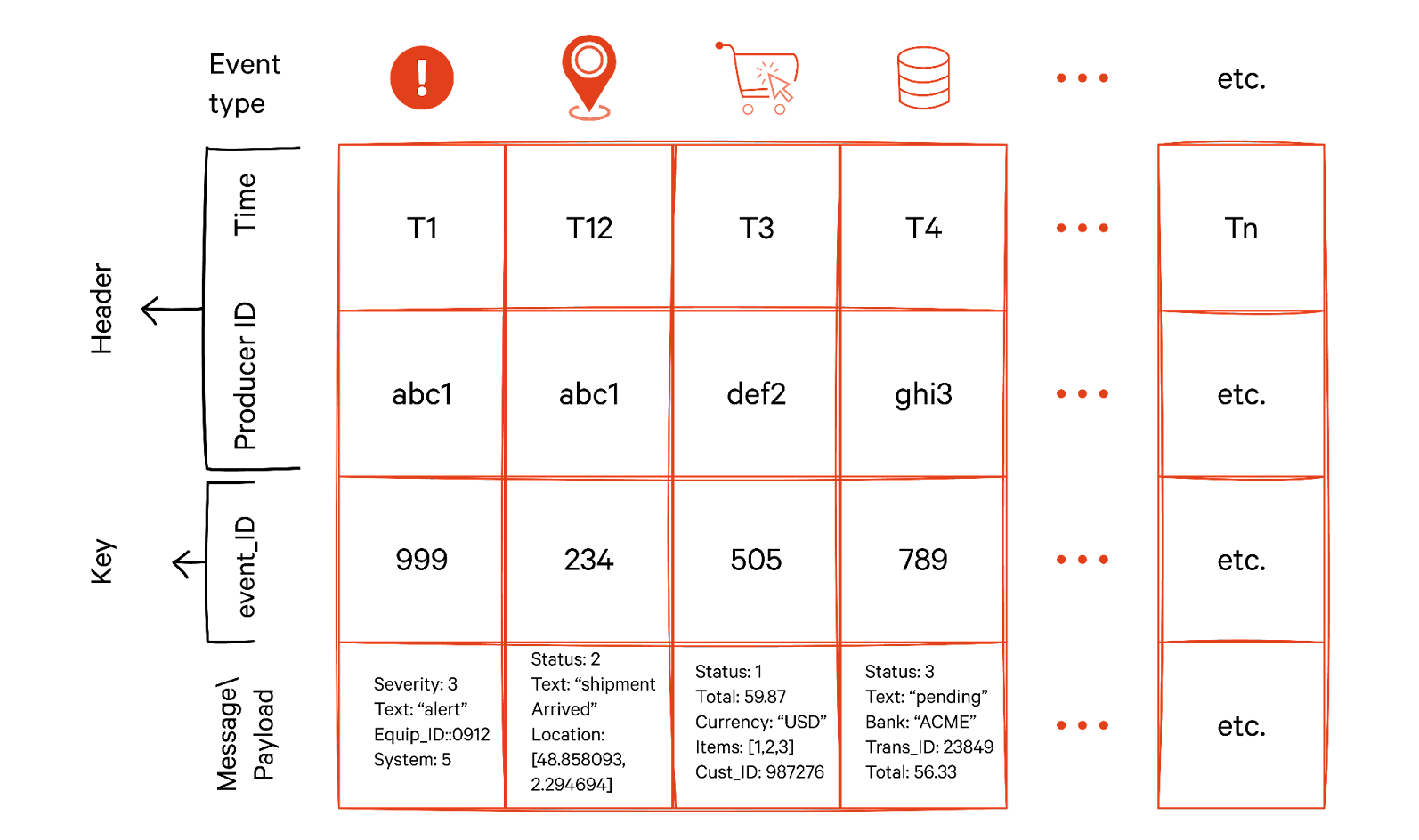

Event stream processing (ESP) is the continuous processing of real-time events. An event is a change in application state, such as a completed transaction, a user click, or an IoT sensor reading. An event stream is a sequence of events ordered by time. Rather than handling each event in isolation, ESP processes many related events together: events are grouped, filtered, summarized, and sorted to derive real-time insights from thousands of continuous events.
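To make these terms concrete, here is a minimal Python sketch of an event, a stream, and a processing step that filters, groups, and summarizes. The `Event` fields and the `running_totals` function are illustrative assumptions, not a standard schema or any engine's API, and the in-memory list stands in for a live stream.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass(frozen=True)
class Event:
    """One state change. The field names are hypothetical."""
    timestamp: float  # seconds since the epoch
    key: str          # e.g. a user or device ID
    kind: str         # e.g. "click", "purchase", "sensor_reading"
    value: float


def running_totals(stream: Iterable[Event], kind: str) -> Iterator[tuple[str, float]]:
    """Keep events of one kind, group them by key, and emit an updated total per event."""
    totals: dict[str, float] = defaultdict(float)
    for event in stream:                  # events are handled one at a time, in arrival order
        if event.kind != kind:            # filter
            continue
        totals[event.key] += event.value  # group by key and summarize
        yield event.key, totals[event.key]


# A list stands in for a stream here; a real stream would be unbounded.
events = [
    Event(1.0, "alice", "purchase", 20.0),
    Event(2.0, "bob", "click", 1.0),
    Event(3.0, "alice", "purchase", 5.0),
    Event(4.0, "bob", "purchase", 12.5),
]
results = list(running_totals(events, "purchase"))
print(results)  # [('alice', 20.0), ('alice', 25.0), ('bob', 12.5)]
```

Because the function is a generator, each result is available as soon as its event has been seen, rather than after the whole input has been collected.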

Batch processing handles data in predetermined chunks or batches, typically processed during scheduled intervals. In contrast, event stream processing deals with event data in a live, ongoing manner. This distinction brings several unique advantages. For example, you can:

- Gain insights almost instantaneously as data is being generated, whereas batch processing involves inherent delays.

- Adapt to fluctuating data volumes more efficiently, whereas batch processing can be less flexible in managing data variability.

- Optimize computing resources by processing only relevant and current data, whereas batch processing might consume more resources as it processes accumulated data.

Given the potentially large and variable nature of event streams, ESP systems are designed to be scalable. They are also resilient to failures, ensuring processing continuity even during system malfunctions.

Benefits of event stream processing

Event streaming offers advantages that are increasingly important as organizations strive for real-time insights and responsiveness. Understanding these benefits can help businesses use event streaming to improve their operations and decision-making. Here are six key benefits of event stream processing:

- Real-time data processing

- Enhanced scalability

- Improved resilience and fault tolerance

- Cost efficiency

- Enhanced customer experiences

- Support for advanced analytics and machine learning

1. Real-time data processing

One of the most significant benefits of event streaming is its ability to process data in real time. Unlike traditional batch processing, which collects and processes data at scheduled intervals, event streaming allows organizations to react to data as it arrives. This immediacy is crucial for applications that require instant feedback, such as fraud detection in financial transactions or real-time analytics in e-commerce. By processing events as they occur, businesses can make timely decisions that enhance customer experiences and operational efficiency.

2. Enhanced scalability

Event streaming systems are designed to handle fluctuating data volumes seamlessly. As businesses grow and data generation increases, the ability to scale operations without compromising performance becomes essential. Event streaming platforms can dynamically allocate resources to accommodate varying loads, ensuring that systems remain responsive even during peak times. This scalability is particularly beneficial for organizations experiencing rapid growth or those with unpredictable data patterns.

3. Improved resilience and fault tolerance

In a world where data integrity is paramount, event streaming provides enhanced resilience against failures. Traditional batch processing systems can be vulnerable to data loss or corruption, especially when processing large volumes of data at once. In contrast, event streaming allows for continuous processing, meaning that if an error occurs, only the affected event needs to be retried, minimizing disruption. This fault tolerance is critical for maintaining data accuracy and reliability, especially in industries where compliance and data integrity are non-negotiable.

4. Cost efficiency

By optimizing resource utilization and reducing the need for extensive hardware, event streaming can lead to significant cost savings. Traditional batch processing often requires substantial infrastructure to handle large data sets, which can be costly to maintain. Event streaming, on the other hand, allows organizations to process only relevant data in real time, reducing the overall computational load and associated costs. Additionally, many event streaming platforms offer tiered storage solutions, enabling businesses to manage data retention more effectively and economically.

5. Enhanced customer experiences

In today’s competitive landscape, providing exceptional customer experiences is crucial for business success. Event streaming enables organizations to deliver personalized and timely interactions by analyzing customer behavior in real time. For instance, e-commerce platforms can use event streaming to recommend products based on a user’s browsing history or to adjust pricing dynamically based on demand. This level of responsiveness not only improves customer satisfaction but also fosters loyalty and repeat business.

6. Support for advanced analytics and machine learning

Event streaming serves as a robust foundation for advanced analytics and machine learning applications. By continuously feeding real-time data into analytical models, organizations can gain deeper insights and make more informed predictions. This capability is particularly valuable in sectors such as finance, healthcare, and marketing, where understanding trends and patterns can lead to competitive advantages. The integration of event streaming with machine learning frameworks allows businesses to automate decision-making processes and enhance operational efficiency.

When to use event streaming vs. batch processing

Understanding when to use event streaming versus batch processing is essential for optimizing data workflows and achieving business objectives. Each approach has its strengths and is suited to different scenarios. The key factors to weigh are:

- Nature of the data

- Speed of response

- Resource availability

- Use case specificity

1. Nature of the data

The first consideration is the nature of the data being processed. If the data is generated continuously and requires immediate action—such as user interactions on a website, sensor data from IoT devices, or financial transactions—event streaming is the ideal choice. This approach allows organizations to capture and respond to events in real time, ensuring they remain agile and competitive.

Conversely, if the data is collected in large volumes but does not require immediate processing—such as historical sales data or monthly performance reports—batch processing may be more appropriate. Batch processing can efficiently handle large datasets at scheduled intervals, making it suitable for tasks like generating reports or conducting periodic analyses.

2. Speed of response

In scenarios where speed is critical, event streaming is the clear winner. Businesses that operate in fast-paced environments, such as stock trading or online gaming, benefit from the ability to process events as they happen. This immediacy allows organizations to capitalize on fleeting opportunities and respond to market changes swiftly.

On the other hand, if the application can tolerate some latency—such as end-of-day reporting or data aggregation for analytics—batch processing can be a more efficient and cost-effective solution. It allows for the accumulation of data over time, which can then be processed in bulk, reducing the overhead associated with continuous processing.

3. Resource availability

Resource availability is another crucial factor in deciding between event streaming and batch processing. Event streaming systems often require a more sophisticated infrastructure to handle real-time data flows, which may necessitate a higher initial investment in technology and expertise. Organizations with the resources to implement and maintain such systems will often find that the benefits of event streaming outweigh the costs.

In contrast, batch processing can be a more accessible option for organizations with limited resources or those just starting their data journey. It allows businesses to implement data processing capabilities without the need for extensive infrastructure, making it a practical choice for smaller organizations or those with less complex data needs.

4. Use case specificity

Finally, the specific use case should guide the decision. For applications that demand real-time insights, such as fraud detection, customer engagement, or operational monitoring, event streaming is essential. It enables organizations to act on data as it flows, providing a competitive edge in fast-moving markets.

For use cases that involve periodic analysis or reporting, such as financial audits or compliance checks, batch processing is often sufficient. It allows organizations to compile and analyze data at regular intervals, ensuring that they meet regulatory requirements without the need for constant monitoring.

Practical use cases

Event stream processing finds applications in various industries and scenarios where immediate data analysis is crucial. For example:

- In finance, ESP is used for fraud detection and high-frequency trading, where milliseconds can make a significant difference.

- Social media platforms use ESP for real-time content recommendation and sentiment analysis based on live user activity.

- In IoT, ESP is used for immediate responses in smart home systems, industrial monitoring, and telematics.

- Real-time patient monitoring systems utilize ESP for alerting and health data analysis.

- ESP aids in tracking logistics in real-time, ensuring efficient movement of goods and identifying potential delays immediately.

Event stream processing patterns

Event stream processing involves different data transformations for analytics. We give some common patterns below.

Aggregation

Aggregation in stream processing involves summarizing event data over a specified time window. This can include calculating averages, sums, or counts. For example, an e-commerce platform might use aggregation to calculate the total sales volume every hour. Some ways of aggregating streaming data include:

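- Hopping window aggregation uses windows of a fixed size that advance by a fixed interval. When the advance interval is smaller than the window size, consecutive windows overlap, and an event can belong to more than one window.

- Session window aggregation groups events into sessions separated by periods of inactivity. A session closes when no new event arrives within a defined idle gap.

You can also implement tumbling window aggregation, a special case in which the window size and the advance interval are equal, so windows never overlap and each event lands in exactly one window.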
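The window types above differ mainly in how an event's timestamp maps to one or more windows. The following Python sketch shows one way to express that mapping. The function names and the `[start, end)` convention are assumptions for illustration; stream processing engines provide their own windowing operators.

```python
from collections import defaultdict


def tumbling_window(ts: float, size: float) -> tuple[float, float]:
    """Return the single [start, end) window of length `size` that contains `ts`."""
    start = (ts // size) * size
    return (start, start + size)


def hopping_windows(ts: float, size: float, advance: float) -> list[tuple[float, float]]:
    """Return every [start, end) window of length `size` that contains `ts`,
    where windows start at multiples of `advance`."""
    windows = []
    start = (ts // advance) * advance  # latest window start at or before ts
    while start > ts - size:           # this window still covers ts
        windows.append((start, start + size))
        start -= advance
    return sorted(windows)


def session_windows(timestamps: list[float], gap: float) -> list[list[float]]:
    """Group sorted timestamps into sessions; more than `gap` of idle time starts a new one."""
    sessions: list[list[float]] = []
    for ts in timestamps:
        if sessions and ts - sessions[-1][-1] <= gap:
            sessions[-1].append(ts)
        else:
            sessions.append([ts])
    return sessions


def sum_by_tumbling_window(events: list[tuple[float, float]], size: float) -> dict:
    """Sum (timestamp, amount) pairs per tumbling window, e.g. sales per hour."""
    totals: dict = defaultdict(float)
    for ts, amount in events:
        totals[tumbling_window(ts, size)] += amount
    return dict(totals)


print(tumbling_window(70, 60))                # (60, 120)
print(hopping_windows(70, 60, 30))            # [(30, 90), (60, 120)]
print(session_windows([1, 2, 3, 20, 21, 50], gap=10))
hourly_sales = sum_by_tumbling_window([(10, 5.0), (3599, 7.0), (3600, 1.0)], size=3600)
print(hourly_sales)                           # {(0, 3600): 12.0, (3600, 7200): 1.0}
```

Note that `hopping_windows` with `advance` equal to `size` returns exactly the tumbling window, which matches the equal-sizes description above. The sketch assumes events arrive in timestamp order and ignores late or out-of-order events, which real engines must handle.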

Filtering

Filtering in stream processing selectively excludes data from processing based on specific criteria. It's often used to reduce data volume and increase processing efficiency. For instance, in financial transactions, filtering might remove transactions below a certain value to focus on higher-value transactions for fraud analysis. This technique is often combined with other patterns like aggregation, windowing, and preprocessing for more effective data analysis.
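As a minimal sketch of the financial example, the Python generator below passes through only transactions at or above a threshold. The `amount` field and the `high_value` name are assumptions made for illustration.

```python
from typing import Iterable, Iterator


def high_value(transactions: Iterable[dict], min_amount: float) -> Iterator[dict]:
    """Yield only transactions whose amount is at least `min_amount`."""
    for tx in transactions:
        if tx["amount"] >= min_amount:
            yield tx


transactions = [
    {"id": "t1", "amount": 12.00},
    {"id": "t2", "amount": 4800.00},
    {"id": "t3", "amount": 99.99},
    {"id": "t4", "amount": 1000.00},
]
# Downstream fraud analysis only sees the transactions that pass the filter.
flagged_ids = [tx["id"] for tx in high_value(transactions, min_amount=1000.00)]
print(flagged_ids)  # ['t2', 't4']
```

Placing a filter like this early in a pipeline means later stages, such as aggregation or joins, have less data to handle.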

Joins

Joins allow you to combine your event stream with another event stream or with static datasets for additional context. This pattern is beneficial in scenarios like augmenting real-time user activity streams with static user profile information to personalize content or ads.

Windowed joins apply temporal constraints to data joins in stream processing. By specifying a time window, this pattern correlates events that occur within a specific timeframe of each other, such as matching customer orders with inventory changes within a five-minute window.
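The two join styles described above can be sketched in Python as follows. The field names (`user_id`, `sku`, `ts`) and both function names are hypothetical. For simplicity the windowed join works on finite lists, whereas a real engine would buffer only recent events and expire them once they fall outside the window.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def enrich_with_profiles(clicks: Iterable[dict], profiles: dict[str, dict]) -> Iterator[dict]:
    """Stream-to-table join: attach static profile fields to each click event."""
    for click in clicks:
        profile = profiles.get(click["user_id"])
        if profile is not None:  # inner join: clicks without a profile are dropped
            yield {**click, **profile}


def windowed_join(orders: list[dict], changes: list[dict], window: float) -> list[tuple[dict, dict]]:
    """Stream-to-stream join: pair orders and inventory changes for the same SKU
    whose timestamps are at most `window` seconds apart."""
    changes_by_sku: dict[str, list[dict]] = defaultdict(list)
    for change in changes:
        changes_by_sku[change["sku"]].append(change)
    pairs = []
    for order in orders:
        for change in changes_by_sku.get(order["sku"], []):
            if abs(order["ts"] - change["ts"]) <= window:
                pairs.append((order, change))
    return pairs


profiles = {"u1": {"segment": "premium"}}
clicks = [{"user_id": "u1", "page": "/shoes"}, {"user_id": "u2", "page": "/hats"}]
enriched = list(enrich_with_profiles(clicks, profiles))
print(enriched)  # [{'user_id': 'u1', 'page': '/shoes', 'segment': 'premium'}]

orders = [{"sku": "A", "ts": 100}, {"sku": "B", "ts": 1000}]
changes = [
    {"sku": "A", "ts": 250, "delta": -1},
    {"sku": "A", "ts": 500, "delta": -2},
    {"sku": "B", "ts": 100, "delta": -1},
]
matches = windowed_join(orders, changes, window=300)  # five-minute window
print(len(matches))  # 1
```

Only the order for SKU A at `ts=100` and the change at `ts=250` fall within 300 seconds of each other, so they form the single matched pair.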

You can learn more about stream processing patterns on the Redpanda blog.

Essential considerations in event stream processing implementation

An event stream processing engine is a software system designed to handle and process large event streams. Selecting an appropriate ESP engine is critical. Factors like data throughput, latency requirements, and compatibility with existing systems guide this choice.

Popular engines such as Apache Flink® can handle high-throughput scenarios with low-latency processing capabilities. The stream processing engine is typically paired with a real-time data ingestion platform like Redpanda, which supplies the event streams that the engine consumes.

Implementing event stream processing involves several key considerations, each contributing to the system's overall effectiveness and efficiency.

Scalability

A well-designed ESP system must scale with fluctuating data volumes and maintain consistent performance. Scalability can be achieved through techniques like dynamic resource allocation and load balancing. Using cloud-based solutions or distributed architectures allows for elasticity – the ability to scale resources up or down as needed. This scalability is critical for handling unpredictable data volumes efficiently.

Fault tolerance

Equally important is the system's ability to continue functioning despite failures. Redundancy, checkpoints, and failover mechanisms are standard practices for ensuring uninterrupted processing.

Strategies like retry logic and dead-letter queues for unprocessable messages help the system remain operational and avoid losing data when failures occur. Validation checks, anomaly detection mechanisms, and data quality assessments keep data accurate and reliable.
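Retry logic and a dead-letter queue can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: `process_with_retries` and `parse_amount` are hypothetical names, the dead-letter queue is a plain list, and there is no backoff delay between attempts, which a production system would normally add.

```python
from typing import Callable


def process_with_retries(events: list, handler: Callable, max_attempts: int = 3):
    """Apply `handler` to each event, retrying on failure.
    Events that still fail after `max_attempts` go to the dead-letter list."""
    results, dead_letters = [], []
    for event in events:
        for attempt in range(1, max_attempts + 1):
            try:
                results.append(handler(event))
                break  # success: move on to the next event
            except Exception as exc:
                if attempt == max_attempts:
                    # Keep the event and the error so it can be inspected or replayed later.
                    dead_letters.append({"event": event, "error": repr(exc)})
    return results, dead_letters


already_failed = set()


def parse_amount(raw: str) -> float:
    """Hypothetical handler: fails once for "7.5" (transient), always for malformed input."""
    if raw == "7.5" and raw not in already_failed:
        already_failed.add(raw)
        raise TimeoutError("temporary outage")
    return float(raw)  # raises ValueError for input such as "oops"


results, dead_letters = process_with_retries(["1.0", "7.5", "oops"], parse_amount)
print(results)                             # [1.0, 7.5]
print([d["event"] for d in dead_letters])  # ['oops']
```

The transient failure succeeds on the second attempt, while the malformed event is set aside instead of blocking the rest of the stream.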

State management in event streaming

Managing state in ESP – keeping track of previous events to make sense of the new ones – is challenging yet essential, especially in systems dealing with complex transactions or analytics. Effective state management ensures data consistency across the system, enabling accurate and reliable processing of event streams.
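As a rough illustration of keyed state, the Python sketch below keeps a running average per key and can save and restore that state. The `RunningAverage` class and its JSON snapshot format are assumptions for this example. Real engines persist state through their own checkpointing mechanisms.

```python
import json


class RunningAverage:
    """Stateful operator: remembers a count and a total per key across events."""

    def __init__(self) -> None:
        self.state: dict[str, list] = {}  # key -> [count, total]

    def update(self, key: str, value: float) -> float:
        """Fold one event into the state and return the key's current average."""
        count, total = self.state.get(key, (0, 0.0))
        count, total = count + 1, total + value
        self.state[key] = [count, total]
        return total / count

    def snapshot(self) -> str:
        """Serialize the state so processing can resume after a restart."""
        return json.dumps(self.state)

    @classmethod
    def restore(cls, snapshot: str) -> "RunningAverage":
        operator = cls()
        operator.state = json.loads(snapshot)
        return operator


op = RunningAverage()
op.update("sensor-1", 20.0)
op.update("sensor-1", 22.0)
saved = op.snapshot()

# Simulate a restart: a new operator picks up where the old one left off.
recovered = RunningAverage.restore(saved)
average = recovered.update("sensor-1", 24.0)
print(average)  # 22.0
```

Without the restored state, the third reading would produce an average of 24.0 instead of 22.0, which is the kind of inconsistency state management is meant to prevent.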

Integration with existing data infrastructure

Integrating ESP systems with existing data infrastructure is vital for seamless operations. This involves ensuring compatibility with current data storage solutions, analytics tools, and other software systems. The integration strategy should prioritize minimal disruption to existing workflows while maximizing the benefits of real-time data processing.

Best practices in event stream processing

For new engineers venturing into ESP, starting with a solid understanding of the fundamental concepts of stream processing is important. Hands-on experience with different tools and systems, continuous learning, and staying updated with ESP's latest trends and best practices are crucial for professional growth in this field. The following best practices help ensure that the ESP system is functional, efficient, and secure.

Monitor for data quality

Continuous monitoring of the ESP system is essential for maintaining its health and performance. Data quality assessments ensure that the data being processed is accurate and reliable. Regular audits and consistency checks help preserve the integrity of the data throughout the processing pipeline. Tools that provide real-time metrics and logs can also be used to monitor system performance. Based on these insights, performance tuning ensures the system operates optimally.
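One performance metric that is often worth tracking is end-to-end latency, the time between an event being created and being processed. The Python sketch below summarizes it with percentiles. The record layout, the function names, and the nearest-rank percentile method are assumptions chosen for simplicity; monitoring tools typically compute such figures for you.

```python
import math


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list (`pct` between 0 and 100)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def latency_report(records: list[tuple[float, float]]) -> dict[str, float]:
    """Summarize latency from (created_at, processed_at) pairs in milliseconds."""
    latencies = [processed - created for created, processed in records]
    return {
        "p50": percentile(latencies, 50),
        "p99": percentile(latencies, 99),
        "max": max(latencies),
    }


# Ten events created 100 ms apart; one of them was processed slowly.
observed = [12, 15, 11, 250, 14, 13, 16, 12, 15, 14]
records = [(i * 100, i * 100 + latency) for i, latency in enumerate(observed)]
report = latency_report(records)
print(report)  # {'p50': 14, 'p99': 250, 'max': 250}
```

The median looks healthy here while the tail does not, which is why high percentiles are usually monitored alongside averages.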

Ensure security at every stage

Security and privacy considerations are also at the forefront, particularly when handling sensitive information. Encrypting data streams, implementing strict access controls, and adhering to data protection regulations safeguard the integrity and confidentiality of the data processed.

Optimize resource utilization

Efficient resource utilization is vital for the cost-effective operation of ESP systems. Practices like optimizing data flow paths, reducing data redundancy, and choosing the right processing resources help minimize operational costs while maximizing system efficiency.

Balance throughput with latency

Throughput is the volume of data processed per unit of time, while latency is the time taken to process each individual event. In ESP, processing data in real time with minimal latency is crucial. Techniques like in-memory processing, stream partitioning, and optimizing query mechanisms are key. However, low latency should not come at the cost of throughput: larger batches generally raise throughput but delay individual events. Tuning system configurations, such as batch sizes, and employing asynchronous processing can effectively manage this balance.
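The effect of batch size on this balance can be illustrated with a small micro-batching sketch in Python. The `micro_batches` function and its `max_size` and `max_wait` parameters are hypothetical names. To keep the example deterministic, the time limit is checked only when a new event arrives, whereas a real system would also flush on a timer.

```python
from typing import Iterable, Iterator


def micro_batches(events: Iterable[tuple[float, str]], max_size: int, max_wait: float) -> Iterator[list[str]]:
    """Group (arrival_seconds, payload) events into batches.
    A batch is flushed when it reaches `max_size` items, or when a new event
    arrives at least `max_wait` seconds after the batch's first event."""
    batch: list[str] = []
    first_arrival = 0.0
    for arrival, payload in events:
        if batch and arrival - first_arrival >= max_wait:
            yield batch  # time limit reached: bounds the delay added by batching
            batch = []
        if not batch:
            first_arrival = arrival
        batch.append(payload)
        if len(batch) >= max_size:
            yield batch  # size limit reached: favors throughput
            batch = []
    if batch:
        yield batch      # flush whatever is left at the end of the input


events = [(0.00, "a"), (0.01, "b"), (0.02, "c"), (0.50, "d"), (0.51, "e")]
batches = list(micro_batches(events, max_size=2, max_wait=0.1))
print(batches)  # [['a', 'b'], ['c'], ['d', 'e']]
```

Raising `max_size` and `max_wait` produces fewer, larger batches, which usually improves throughput but means each event may wait longer before it is processed. Lowering them does the opposite.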

Use a data ingestion platform

The diversity of data sources and formats introduces complexity in event stream processing. Integrating adaptable ingestion platforms like Redpanda and employing middleware solutions can streamline handling various data formats, ensuring smooth data flow into the system.

Latest trends in event stream processing

The landscape of event stream processing is continually evolving, influenced by technological advancements and emerging industry needs. Let us explore some trends and developments in this space.

Cloud-based stream processing

Cloud-based solutions have changed event stream processing by offering scalability and flexibility cost-effectively. Managed services from major cloud providers, such as Amazon Kinesis, Azure Stream Analytics, and Google Cloud Dataflow, provide robust environments for handling massive data streams.

These platforms allow businesses of all sizes to implement sophisticated event stream processing capabilities without extensive on-premise infrastructure. They offer key advantages like elasticity to handle variable data loads, built-in fault tolerance, and integration with other cloud services.

Advanced analytics and AI

One of the most exciting developments in event stream processing is its integration with AI and advanced analytics. This synergy enables more sophisticated data analysis techniques like predictive modeling and real-time decision-making.

For instance, streaming data can be fed into machine learning models to detect patterns or anomalies as they occur. This integration is invaluable in fraud detection in financial services or real-time personalization in e-commerce. Processing and analyzing data in real time empowers businesses to make more informed and timely decisions.
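As a simple stand-in for a machine learning model, the Python sketch below flags anomalies in a stream with a running z-score, updating its mean and variance incrementally with Welford's algorithm. The `RunningZScore` class, the threshold of 3, and the warm-up length are illustrative assumptions, not a recommended fraud detection method.

```python
import math


class RunningZScore:
    """Flags values that are far from the mean of the values seen so far."""

    def __init__(self, threshold: float = 3.0, warmup: int = 5) -> None:
        self.threshold = threshold  # how many standard deviations counts as anomalous
        self.warmup = warmup        # number of values to observe before flagging anything
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0               # running sum of squared deviations from the mean

    def observe(self, x: float) -> bool:
        """Return True if `x` looks anomalous, then fold it into the statistics."""
        anomalous = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))  # sample standard deviation
            anomalous = std > 0 and abs(x - self.mean) / std > self.threshold
        # Welford's online update: no need to store past values.
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        return anomalous


detector = RunningZScore()
readings = [10, 11, 9, 10, 12, 10, 11, 95, 10]
flags = [detector.observe(value) for value in readings]
print(flags)  # only the reading of 95 is flagged
```

The detector needs constant memory per stream, so it can run inline as events arrive. One design choice to be aware of is that flagged values are still folded into the statistics, which makes the detector less sensitive right after an outlier.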

Conclusion

Event stream processing enables real-time data analysis and decision-making by processing continuous event streams with minimal delay. This technology is essential for businesses and organizations that require immediate insights and actions based on live events.

Event stream processing frameworks like Flink work well with real-time data ingestion platforms like Redpanda. Redpanda’s design for high performance, cloud compatibility, and ease of use makes it an attractive option for companies looking to adopt modern event stream processing capabilities.

Recently, the introduction of Data Transforms Sandbox marked a paradigm shift in how you use Redpanda—from the infrastructure for fast and cost-efficient data ingestion to an integrated platform running everyday data processing tasks. The framework to build and deploy inline transformations (data transforms) on data directly within Redpanda is now available as a Beta feature.

Event streaming FAQs

1. What types of events can be processed using event streaming?

Event streaming can handle a wide variety of events, including user interactions (like clicks and purchases), system logs, sensor data from IoT devices, financial transactions, and more. Essentially, any significant change in state or condition can be captured and processed in real time.

2. How does event streaming improve data accuracy?

Event streaming enhances data accuracy by processing events as they occur, which reduces the risk of data loss or corruption that can happen during batch processing. By addressing each event individually, organizations can implement immediate validation checks and error handling, ensuring that only accurate data is processed and stored.

3. Can event streaming be integrated with existing data systems?

Yes, event streaming can be seamlessly integrated with existing data systems. Many event streaming platforms, including Redpanda, offer compatibility with various data storage solutions, analytics tools, and other software systems. This integration allows organizations to enhance their current workflows without significant disruptions.

4. How can businesses measure the success of their event streaming initiatives?

Businesses can measure the success of their event streaming initiatives through key performance indicators (KPIs) such as reduced latency in data processing, improved response times to events, increased customer engagement metrics, and cost savings from optimized resource utilization.

5. How can organizations ensure the security of their event streaming data?

Organizations can enhance the security of their event streaming data by implementing encryption for data in transit and at rest, establishing strict access controls, and regularly auditing their systems for vulnerabilities.
