Kafka consumer lag—Measure and reduce

Kafka consumer lag

Apache Kafka® is an open-source distributed streaming platform for building applications that rely on real-time event processing and durable event storage. An event-driven application architecture helps architects decouple application components and scale them independently. While decoupling improves scalability and resilience, it also makes the architecture harder to debug.

Optimizing performance for distributed applications requires considerable engineering effort, and Kafka is no different. Kafka consumer lag, which measures the delay between a Kafka producer and consumer, is a key Kafka performance indicator. This article explores Kafka consumer lag in detail, including causes, monitoring, and strategies to address it.

Summary of key Kafka consumer lag concepts

Kafka consumer lag is a key performance indicator for the popular Kafka streaming platform. All else equal, lower consumer lag means better Kafka performance. Common causes of Kafka consumer lag include incoming traffic surges, data skew in partitions, slow processing jobs, and errors in code and pipeline components. We will explore these causes in more detail later in this article.

What is Kafka consumer lag?

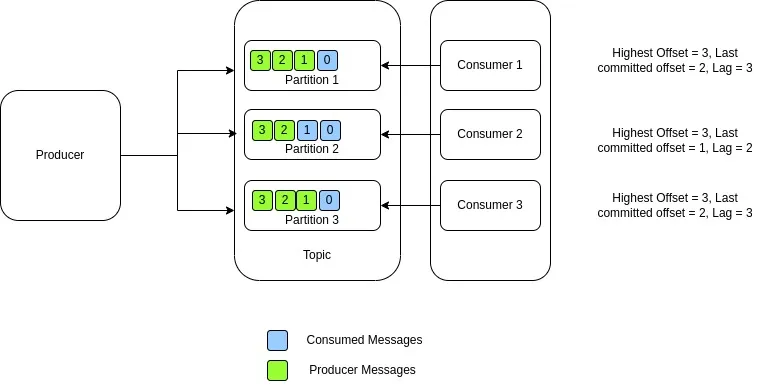

Kafka consumer lag is the difference between the offset of the last message written by the producer and the offset last committed by the consumer group. It represents how far consumer processing trails behind production.

Understanding the Kafka working model

Kafka excels in acting as a foundation for building decoupled applications that rely on event processing. It does its magic through the concept of producers and consumers. Producers are applications that send events to the Kafka broker. The broker stores the messages durably and enables the client applications to process the events logically.

Data is logically separated inside brokers using Kafka topics. A topic is a named category of data, and topic names are unique across a Kafka cluster. Topics are further divided into partitions to facilitate scaling. Each partition holds a subset of the data belonging to a topic.

When a producer writes a message to a topic, the Kafka broker appends it to a partition that belongs to the topic. Kafka tracks write progress in each partition by recording the position of the last write. This position is called the log end offset. It is a partition-specific offset.

Consumers contain application logic about how to process the data written to partitions. To facilitate scaling within consumers, Kafka uses the concept of consumer groups. A consumer group is a set of consumers collaborating to consume messages from the same topic. Kafka ensures consumers belonging to the same consumer group receive messages from different partitions.

When a new consumer joins the group, Kafka rebalances the members in that consumer group to ensure that the new consumer gets a fair share of assigned partitions. Every rebalance operation results in new group configurations. Group configuration here means the assignment of consumers to various partitions.

Kafka message processing can be scaled by adding more consumers to a consumer group. To enable resilience, Kafka consumers keep track of the last position in a partition that they have read. This position is called the consumer offset. It lets consumers resume from where they left off after unfortunate situations like crashes. Consumer offsets are stored in a separate Kafka topic.

The difference between the log end offset stored by the broker and the last committed consumer offset for that partition is called consumer lag. It defines the gap between consumers and producers. Consumer lag provides information about the real-time performance of the processing system. A large or growing consumer lag often flags a sudden spike in traffic, skewed data patterns, a scaling problem, or even a code-level issue.
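The definition above can be expressed as a small calculation. The following is a minimal sketch, assuming the log end offsets and committed offsets have already been fetched into plain dictionaries keyed by partition number. The function name `consumer_lag` and the sample values are illustrative, and treating a partition with no committed offset as fully unread is a simplifying assumption.

```python
from typing import Dict


def consumer_lag(log_end_offsets: Dict[int, int],
                 committed_offsets: Dict[int, int]) -> Dict[int, int]:
    """Return per-partition lag: log end offset minus committed offset."""
    lag = {}
    for partition, end_offset in log_end_offsets.items():
        # Assumption: a partition without a committed offset counts as fully unread.
        committed = committed_offsets.get(partition, 0)
        # Clamp at zero because the two offsets are sampled at slightly different times.
        lag[partition] = max(end_offset - committed, 0)
    return lag


# Illustrative offsets for a three-partition topic.
log_end = {0: 17, 1: 15, 2: 40}
committed = {0: 15, 1: 14}

per_partition = consumer_lag(log_end, committed)
print(per_partition)                # {0: 2, 1: 1, 2: 40}
print(sum(per_partition.values()))  # 43
```

Summing the per-partition values gives a single number for the whole consumer group, but the per-partition breakdown is usually more useful for diagnosis.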

Reasons for Kafka consumer lag

Consumer lag can occur because of several internal and external factors. Even a healthy Kafka cluster will have some consumer lag at times. As long as the lag goes down in a reasonable time, there is nothing to worry about. The lag becomes alarming when it does not decrease or shows signs of a gradual increase.

Incoming traffic surge

Traffic often varies widely based on external factors. For example, imagine an IoT sensor system that sends alerts based on specific external environment variables. A change in the external environment for a set of customers can flood the topic with a sudden spike of messages. Consumers will have difficulty keeping up with the spike, and the lag can become alarmingly high. Manual scaling helps address Kafka consumer lag in these cases.

Data skew in partitions

Partitions bring parallelism to Kafka. Consumers within a consumer group are mapped to specific partitions. The idea is that each consumer has enough resources to handle messages coming to that partition. But data is often not uniformly distributed across partitions. Kafka provides multiple strategies to select partitions while writing data. The simplest is round-robin assignment, where data is uniformly distributed. But round robin is unsuitable for applications that maintain state or order. In such cases, an application-specific partition key is used.

If the partition key does not distribute data uniformly, some partitions can have more data than others. Imagine a unique customer identity is mapped to a partition key. If a specific customer sends more data than others, that partition will experience a skew leading to consumer lag.
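A short simulation can make the effect of a hot key visible. This is a sketch under stated assumptions: CRC32 stands in for the hash (Kafka's default partitioner uses murmur2, so real partition numbers will differ), and the workload, customer names, and partition count are hypothetical.

```python
import zlib
from collections import Counter


def partition_for(key: str, num_partitions: int) -> int:
    # CRC32 is a stand-in hash for illustration; Kafka's default partitioner uses murmur2.
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def messages_per_partition(keys, num_partitions):
    """Count how many messages land in each partition for the given keys."""
    counts = Counter(partition_for(k, num_partitions) for k in keys)
    return [counts.get(p, 0) for p in range(num_partitions)]


# Hypothetical workload: 100 customers send 10 messages each,
# and one of them ("customer-7") sends 5,000 more.
keys = [f"customer-{i}" for i in range(100) for _ in range(10)]
keys += ["customer-7"] * 5000

counts = messages_per_partition(keys, num_partitions=6)
hot = partition_for("customer-7", 6)
print(counts)
print(f"partition {hot} holds {counts[hot] / sum(counts):.0%} of all messages")
```

Because every message with the same key maps to the same partition, the consumer assigned to that partition receives most of the traffic while the others stay mostly idle.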

Slow processing jobs

Consumers process the messages pulled from the partitions according to application logic. Application logic can contain tasks like complex data transformations, external microservice access, database writes, etc. Such processing steps are time-consuming and can get stuck due to external factors. Imagine a consumer that accesses an external microservice to complete its task. If the response time of the external service increases because of other factors, the consumer slows down and consumer lag grows.

Error in code and pipeline components

Kafka consumers often contain complex application logic. Like any code, that logic can have bugs. For example, a processing module can go into an infinite loop or use inefficient algorithms. Similarly, improper handling of an erroneous or unexpected input message can slow a particular consumer. Such instances will result in consumer lag.

Monitoring Kafka consumer lag

Monitoring Kafka consumer lag helps developers take corrective actions to stabilize the cluster and optimize performance. Typically, there will always be some lag because of batching, and lag values vary from partition to partition. Slight lag is not a significant problem if it is stable. But lag with a tendency to increase points to a problem. This section details how teams can monitor consumer lag to identify potential issues.
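The distinction between stable and increasing lag can be checked programmatically. The sketch below is one simple heuristic, not a standard algorithm: it compares the average of the newer half of a window of lag samples with the older half. The function name `lag_trend` and the 20% threshold are assumptions chosen for illustration.

```python
from statistics import mean


def lag_trend(samples, threshold=0.2):
    """Classify lag samples (oldest first) as increasing, decreasing, or stable."""
    if len(samples) < 4:
        return "unknown"  # too few samples to compare two halves
    mid = len(samples) // 2
    older, newer = mean(samples[:mid]), mean(samples[mid:])
    baseline = max(older, 1)  # avoid dividing by zero when the group was caught up
    change = (newer - older) / baseline
    if change > threshold:
        return "increasing"
    if change < -threshold:
        return "decreasing"
    return "stable"


print(lag_trend([10, 12, 9, 11, 10, 12]))     # stable
print(lag_trend([10, 20, 40, 80, 160, 320]))  # increasing
print(lag_trend([500, 300, 120, 40, 10, 0]))  # decreasing
```

In practice the window length and threshold would need tuning to the traffic pattern of the topic being monitored.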

Monitoring Kafka consumer lag with the consumer group script

The Kafka consumer group script exposes key details about consumer group performance. It reports each partition’s ‘current offset’, ‘log end offset’, and lag. The ‘current offset’ of a partition is the last offset committed by the consumer handling that partition. The ‘log end offset’ is the highest offset in that partition. In other words, it represents the offset of the last message written to that partition. The difference between these two is the amount of consumer lag.

You can use the command below to get consumer lag details with the consumer group script.

$KAFKA_HOME/bin/kafka-consumer-groups.sh --bootstrap-server <> --describe --group <group_name>

Executing this against a live server produces output like the following.

GROUP TOPIC PARTITION CURRENT-OFFSET LOG-END-OFFSET LAG OWNER
ub-kf test-topic 0 15 17 2 ub-kf-1/127.0.0.1
ub-kf test-topic 1 14 15 1 ub-kf-2/127.0.0.1

In the above example, you can see lag values varying from partition to partition.
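For scripting, the output of the consumer group script can be parsed into per-partition lag values. The following is a minimal sketch, assuming whitespace-separated columns with a header row that includes TOPIC, PARTITION, and LAG, as in the sample above. Column sets differ between Kafka versions, so the parser looks columns up by header name. The function name `parse_lag` is hypothetical.

```python
SAMPLE_OUTPUT = """\
GROUP TOPIC PARTITION CURRENT-OFFSET LOG-END-OFFSET LAG OWNER
ub-kf test-topic 0 15 17 2 ub-kf-1/127.0.0.1
ub-kf test-topic 1 14 15 1 ub-kf-2/127.0.0.1
"""


def parse_lag(output: str) -> dict:
    """Map (topic, partition) to lag from the describe output."""
    lines = [line.split() for line in output.splitlines() if line.strip()]
    if not lines:
        return {}
    header, rows = lines[0], lines[1:]
    column = {name: index for index, name in enumerate(header)}
    lag_by_partition = {}
    for row in rows:
        lag = row[column["LAG"]]
        if not lag.isdigit():
            continue  # skip rows where lag is not a number, e.g. "-"
        key = (row[column["TOPIC"]], int(row[column["PARTITION"]]))
        lag_by_partition[key] = int(lag)
    return lag_by_partition


lags = parse_lag(SAMPLE_OUTPUT)
print(lags)                # {('test-topic', 0): 2, ('test-topic', 1): 1}
print(sum(lags.values()))  # 3
```

A script like this could run on a schedule and feed the values into an alerting system, although the dedicated tools described next are usually a better fit.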

Monitoring Kafka consumer lag with Burrow

Burrow is an open-source monitoring companion for Kafka. It can monitor the committed consumer offsets and provide reports without the developers having to specify threshold values.

Burrow calculates lag based on a sliding window. It is written in Go and will require a separate installation or container external to the Kafka cluster.

Burrow exposes an API endpoint that provides information on all the consumer groups it monitors. It also supports sending alerts via email, and its configurable HTTP client can integrate alerts with other services.

Monitoring Kafka consumer lag with exporters and generic monitoring tools

Kafka consumer lag is just like any other system metric, and generic monitoring tools like Grafana or Prometheus can track it. This requires a working exporter module that collects metrics from Kafka and sends them to the monitoring tool’s backend. There are multiple open-source Kafka exporters available to do this job.

A popular Kafka exporter module is the Kafka Lag Exporter. It can run in many environments, for example on a Kubernetes cluster or as a standalone Java application. Kafka Lag Exporter can also estimate lag as a time value by using the consumer lag information. It is written in Scala.
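To illustrate how an offset-based lag can be turned into a time estimate, here is a simplified sketch. It keeps a history of (timestamp, log end offset) samples and interpolates the time at which the committed offset was produced. This is an illustration of the general idea and not necessarily how Kafka Lag Exporter implements it. The function name and sample data are hypothetical.

```python
from bisect import bisect_right


def estimate_lag_seconds(history, committed_offset, now):
    """Estimate how many seconds the consumer is behind.

    history: list of (timestamp_seconds, log_end_offset) samples, oldest first,
             with strictly increasing offsets.
    """
    offsets = [offset for _, offset in history]
    if committed_offset >= offsets[-1]:
        return 0.0  # consumer has caught up with the latest sample
    i = bisect_right(offsets, committed_offset)
    if i == 0:
        # Committed offset is older than the whole window, so the
        # lag is at least the age of the oldest sample.
        return float(now - history[0][0])
    (t0, o0), (t1, o1) = history[i - 1], history[i]
    # Linear interpolation between the two surrounding samples.
    produced_at = t0 + (t1 - t0) * (committed_offset - o0) / (o1 - o0)
    return float(now - produced_at)


# Hypothetical samples taken every 10 seconds.
history = [(0, 100), (10, 200), (20, 300)]
print(estimate_lag_seconds(history, committed_offset=250, now=20))  # 5.0
```

A time-based value is often easier to reason about than a raw offset count, because the same offset lag can mean seconds or hours depending on the production rate.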

Another popular exporter is the Kafka Exporter. It is written in Go and exposes consumer lag metrics to Prometheus, which tools like Grafana can then visualize.

Strategies for addressing Kafka consumer lag

There is no one-size-fits-all way to address Kafka consumer lag. The best optimization technique depends on the underlying cause. Below are four strategies that can help address Kafka consumer lag.

Optimizing consumer processing logic

The most straightforward way to solve the consumer lag problem is to identify the bottleneck in the processing logic and make it more efficient. Of course, this is easier said than done. A possible approach is to identify any synchronous blocking operation in the code and make it asynchronous if the use case allows it.
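The sketch below illustrates the difference between handling a batch of messages one blocking call at a time and issuing the calls concurrently. It assumes the slow step is I/O bound. The `call_external_service` coroutine is a hypothetical stand-in that sleeps instead of making a real network request, and the batch is a plain list rather than records polled from Kafka.

```python
import asyncio
import time


async def call_external_service(message: str) -> str:
    # Hypothetical stand-in for a slow network call; sleeps instead of doing I/O.
    await asyncio.sleep(0.05)
    return message.upper()


async def process_sequentially(batch):
    # Each call waits for the previous one to finish.
    return [await call_external_service(m) for m in batch]


async def process_concurrently(batch, max_in_flight: int = 10):
    # The semaphore caps concurrent calls so the external service is not overwhelmed.
    semaphore = asyncio.Semaphore(max_in_flight)

    async def bounded(message):
        async with semaphore:
            return await call_external_service(message)

    # gather returns results in the same order as the input batch.
    return await asyncio.gather(*(bounded(m) for m in batch))


def timed(coroutine):
    start = time.perf_counter()
    result = asyncio.run(coroutine)
    return result, time.perf_counter() - start


batch = [f"event-{i}" for i in range(10)]
seq_result, seq_time = timed(process_sequentially(batch))
con_result, con_time = timed(process_concurrently(batch))
print(f"sequential: {seq_time:.2f}s, concurrent: {con_time:.2f}s")
```

If processing order or at-least-once delivery matters, offsets should only be committed after every message in the batch has finished, which is why the concurrent version waits for the whole batch before returning.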

Modifying partition count

Partition assignment is a crucial factor that affects consumer lag. A situation where only one partition’s lag is higher than others points to a problem in the data distribution. This can be solved using a custom partition key or adding more partitions. The configuration of partitions and consumers will depend on the use case.
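One way to spot the situation described above is to compare each partition's lag with the typical lag across the topic. The following sketch flags partitions whose lag is far above the median. The function name, the factor of 5, and the minimum lag of 100 are arbitrary assumptions for illustration.

```python
from statistics import median


def skewed_partitions(lag_by_partition, factor=5.0, min_lag=100):
    """Return partitions whose lag is much higher than the median lag."""
    if not lag_by_partition:
        return []
    typical = median(lag_by_partition.values())
    return sorted(
        partition
        for partition, lag in lag_by_partition.items()
        # Ignore small absolute lags and use at least 1 as the baseline.
        if lag >= min_lag and lag > factor * max(typical, 1)
    )


print(skewed_partitions({0: 12, 1: 9, 2: 4800, 3: 15}))  # [2]
print(skewed_partitions({0: 5000, 1: 5200, 2: 4900}))    # []
```

The second call returns an empty list because all partitions lag by a similar amount, which suggests a capacity problem rather than a data distribution problem.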

Using an application-specific queue to limit rates

Sometimes the processing logic is a time-consuming operation and can't be optimized. In these cases, developers should consider using an application-specific queue so that consumers can just add messages to the application queue. Once the messages are pushed to the queue, the consumer can wait until they are processed before accepting new messages from Kafka. The second queue helps divide the messages from a single partition among multiple processing instances.
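The pattern can be sketched with a bounded in-process queue and a pool of worker threads. This is a minimal illustration, assuming a single process. The `messages` list stands in for records polled from Kafka, `run_pipeline` and `process` are hypothetical names, and error handling is omitted.

```python
import queue
import threading


def run_pipeline(messages, process, workers=4, queue_size=8):
    """Fan messages out to worker threads through a bounded queue."""
    work = queue.Queue(maxsize=queue_size)
    results = []
    lock = threading.Lock()

    def worker():
        while True:
            item = work.get()
            try:
                if item is None:  # sentinel: no more work for this thread
                    return
                output = process(item)
                with lock:
                    results.append(output)
            finally:
                work.task_done()

    threads = [threading.Thread(target=worker) for _ in range(workers)]
    for thread in threads:
        thread.start()

    for message in messages:  # stand-in for the Kafka poll loop
        work.put(message)     # blocks while the queue is full, limiting the intake rate

    work.join()               # wait until every queued message is processed
    # This is the point where a real consumer could safely commit offsets.

    for _ in threads:
        work.put(None)
    for thread in threads:
        thread.join()
    return results


doubled = run_pipeline(range(20), process=lambda value: value * 2)
print(sorted(doubled))
```

Because workers run in parallel, results arrive in no particular order. Applications that depend on per-key ordering would need to route messages with the same key to the same worker.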

Tuning consumer configuration parameters

Developers can use various consumer parameters to adjust how frequently consumers pull messages from partitions or how much data is fetched in one operation. The parameter ‘fetch.max.wait.ms’ controls the maximum time the broker waits before answering a fetch request when less than ‘fetch.min.bytes’ of data is available. The ‘fetch.max.bytes’ and ‘fetch.min.bytes’ parameters control the maximum and minimum amount of data fetched by consumers in one operation.

Another critical element of reducing consumer lag is to minimize rebalancing operations as much as possible. Each rebalance operation blocks the consumers for some time and can increase the consumer lag. The parameters ‘max.poll.interval.ms’, ‘session.timeout.ms’, and ‘heartbeat.interval.ms’ control the situations where the broker decides that a consumer has been inactive for too long and triggers a rebalance operation. Tuning these values can help in reducing rebalance operations.
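The parameters mentioned in this section can be collected into a single configuration mapping. The sketch below uses the property names from the text with illustrative values, not recommendations. How the mapping is passed to a consumer depends on the client library, and the `check_timeouts` helper is a hypothetical sanity check based on the common guidance that the heartbeat interval should be no more than one third of the session timeout.

```python
# Illustrative values only; suitable settings depend on the workload and client version.
consumer_config = {
    "fetch.min.bytes": 1,            # minimum data the broker returns per fetch
    "fetch.max.bytes": 52428800,     # maximum data returned per fetch (50 MiB)
    "fetch.max.wait.ms": 500,        # longest the broker waits to fill fetch.min.bytes
    "max.poll.interval.ms": 300000,  # maximum allowed gap between poll calls
    "session.timeout.ms": 45000,     # time without heartbeats before the consumer is considered dead
    "heartbeat.interval.ms": 3000,   # how often heartbeats are sent
}


def check_timeouts(config):
    """Return warnings for settings that are likely to cause problems."""
    warnings = []
    if config["heartbeat.interval.ms"] * 3 > config["session.timeout.ms"]:
        warnings.append(
            "heartbeat.interval.ms should be at most one third of session.timeout.ms"
        )
    if config["fetch.min.bytes"] > config["fetch.max.bytes"]:
        warnings.append("fetch.min.bytes should not exceed fetch.max.bytes")
    return warnings


print(check_timeouts(consumer_config))  # []
```

Raising ‘max.poll.interval.ms’ gives slow processing more time before a rebalance is triggered, at the cost of slower detection of consumers that are genuinely stuck.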

Conclusion

Consumer lag is a key metric that indicates how much catching up consumers must do to achieve near real-time operation. While a little consumer lag is inevitable, an increasing consumer lag points to a problem in data distribution, code, or traffic patterns.
