Kafka monitoring—Tutorials and best practices

Kafka monitoring

Apache Kafka® is a distributed streaming platform for large-scale data processing and streaming applications. Kafka monitoring is the process of continuously observing and analyzing the performance and behavior of a Kafka cluster. Monitoring is critical to ensure that clusters run smoothly and optimally, especially in production environments where downtime and delays can have serious consequences.

Monitoring Kafka typically entails keeping track of critical metrics like message throughput, latency, broker resource utilization, and consumer lag. You can do this with various monitoring tools and techniques, such as Kafka's built-in metrics reporting, third-party monitoring solutions, and custom scripts or dashboards.

This article explores vital metrics in Kafka monitoring. We also share tutorials on Kafka monitoring using two popular tools—Grafana and Prometheus—and end with some handy best practices.

Summary of key Kafka monitoring concepts

Metrics in Kafka monitoring

You can monitor several aspects of Kafka to analyze and improve Kafka performance.

Consumer lag

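Monitor consumer lag to ensure consumers keep up with message production. Consumer lag is the gap between the latest message written to a partition and the last message a consumer group has processed. Persistently high lag can indicate issues with the consumer or the Kafka cluster.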
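As a rough illustration of what consumer lag measures, the sketch below computes lag per partition as the log end offset minus the consumer group's committed offset. The consumer_lag helper and the sample offsets are hypothetical; in practice these values would come from your monitoring tooling.

```python
def consumer_lag(end_offsets, committed_offsets):
    """Return per-partition lag and total lag for one consumer group.

    Both arguments map a partition number to an offset. A partition with no
    committed offset is treated as lagging from offset 0 (an assumption of
    this sketch).
    """
    lag = {}
    for partition, end in end_offsets.items():
        committed = committed_offsets.get(partition, 0)
        lag[partition] = max(end - committed, 0)
    return lag, sum(lag.values())


# Hypothetical offsets for a three-partition topic.
end_offsets = {0: 1500, 1: 1480, 2: 1510}
committed = {0: 1500, 1: 1200, 2: 1505}

per_partition, total = consumer_lag(end_offsets, committed)
print(per_partition, total)
```

Tracking lag per partition rather than only the total makes it easier to spot a single stuck consumer.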

Broker resource utilization

Keep track of broker resource utilization, such as CPU and memory usage. Sustained high resource utilization can indicate that the Kafka cluster requires additional resources to function correctly.

Topic and partition metrics

Topics and partitions are key Kafka concepts that help organize and distribute data within a Kafka cluster. Monitoring metrics like the number of messages produced, consumed, and lagging can assist you in identifying any problems with data processing or consumer performance.

Apache ZooKeeper™ metrics

In deployments that use it, ZooKeeper is a critical component of a Kafka cluster responsible for cluster state and configuration. Monitoring ZooKeeper metrics such as latency, connection errors, and outstanding requests can help detect cluster coordination and management issues.
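One way to read these values is ZooKeeper's mntr four-letter command, which prints tab-separated key/value pairs. The sketch below parses such output and flags a high outstanding-request count. The sample text, the parse_mntr helper, and the limit of 10 are illustrative assumptions, and mntr may need to be enabled explicitly in newer ZooKeeper versions.

```python
def parse_mntr(output):
    """Parse tab-separated `mntr` output into a dict of strings."""
    stats = {}
    for line in output.splitlines():
        key, sep, value = line.partition("\t")
        if sep:
            stats[key.strip()] = value.strip()
    return stats


# Hypothetical excerpt of `mntr` output from one ZooKeeper server.
SAMPLE_MNTR = (
    "zk_avg_latency\t2\n"
    "zk_max_latency\t41\n"
    "zk_outstanding_requests\t14\n"
    "zk_server_state\tfollower\n"
)

zk_stats = parse_mntr(SAMPLE_MNTR)
OUTSTANDING_LIMIT = 10  # illustrative threshold, not a recommended value
backlogged = int(zk_stats["zk_outstanding_requests"]) > OUTSTANDING_LIMIT
print(zk_stats["zk_server_state"], backlogged)
```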

Advanced Kafka monitoring

Beyond the basic metrics, advanced Kafka monitoring involves monitoring the more nuanced and intricate features of Kafka. We give some examples below.

Replication and failover monitoring

Monitoring replication and failover mechanisms is critical for ensuring the high availability and reliability of Kafka clusters. This involves verifying that replicas are in sync, detecting out-of-sync replicas, and tracking the performance of the replication and failover systems.
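The out-of-sync check can be sketched as a comparison between each partition's assigned replicas and its in-sync replica (ISR) set. The data structure and the under_replicated helper below are hypothetical; a real check would read this state from the cluster or from an exporter.

```python
def under_replicated(partitions):
    """Return the (topic, partition) keys whose ISR is smaller than the replica set."""
    return [
        key
        for key, state in partitions.items()
        if len(state["isr"]) < len(state["replicas"])
    ]


# Hypothetical cluster state: broker IDs per partition.
partitions = {
    ("orders", 0): {"replicas": [1, 2, 3], "isr": [1, 2, 3]},
    ("orders", 1): {"replicas": [2, 3, 1], "isr": [2]},
}

lagging = under_replicated(partitions)
print(lagging)
```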

Network monitoring

Network monitoring tracks the network infrastructure that connects Kafka brokers and clients. This includes monitoring network latency, packet loss, and capacity utilization.

Performance monitoring

Advanced performance monitoring tracks Kafka's performance over time to discover performance patterns and anomalies. It relies on metrics such as broker CPU, memory consumption, disk usage, and message throughput.
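As a minimal sketch of anomaly detection, the example below flags throughput samples that deviate from the mean of a trailing window by more than a chosen number of standard deviations. This is one simple technique among many, and the window size, threshold, and sample values are assumptions for illustration.

```python
from statistics import mean, stdev


def find_anomalies(samples, window=5, threshold=3.0):
    """Return indices of samples that deviate from the trailing window mean
    by more than `threshold` standard deviations."""
    anomalies = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma > 0 and abs(samples[i] - mu) > threshold * sigma:
            anomalies.append(i)
    return anomalies


# Hypothetical messages-per-second readings with one sudden drop.
throughput = [1000, 1020, 990, 1010, 1005, 1015, 400, 1000, 1010]
anomalous = find_anomalies(throughput)
print(anomalous)
```

Note that an outlier inflates the standard deviation of the windows that follow it, so this approach is better at catching the first sudden change than a sustained one.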

Third-party tools for Kafka monitoring

Several third-party tools can ingest Kafka metrics and provide visual reports and alerts for Kafka users. Here are some examples:

- Confluent Control Center

- Prometheus

- Grafana

- Datadog

- New Relic

- Splunk

When choosing a tool, it's important to consider factors like ease of use, scalability, integrations, and cost. Next, we give tutorials for two popular Kafka monitoring tools: Prometheus and Grafana.

Kafka monitoring with Prometheus

By following the steps given below, you can monitor Kafka and set up alerts for key Kafka metrics using Prometheus and AlertManager. You can customize the configuration to monitor and alert on other metrics based on your specific requirements.

Install Kafka Exporter

Kafka Exporter is a tool that exposes Kafka metrics in a format that Prometheus can scrape. To collect metrics from Kafka, you must install and configure it. You can download the Kafka exporter for Prometheus from GitHub. After you've installed Kafka Exporter, you'll need to tell Prometheus to scrape metrics from it. You can do this by including the following configuration in the Prometheus configuration file:

    scrape_configs:
      - job_name: 'kafka_exporter'
        static_configs:
          - targets: ['<kafka_exporter_host>:<kafka_exporter_port>']

Replace <kafka_exporter_host>:<kafka_exporter_port> with the hostname and port of your Kafka exporter.
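To get a feel for what Prometheus reads from the exporter, the sketch below parses a small sample of the text exposition format into name, labels, and value. The metric name kafka_consumergroup_lag and its labels are assumptions about what your exporter version exposes, and the parser is deliberately simplified: it ignores timestamps and escaped quotes.

```python
import re

SAMPLE_RE = re.compile(r'^(\w+)(?:\{(.*)\})?\s+(\S+)$')
LABEL_RE = re.compile(r'(\w+)="([^"]*)"')


def parse_metrics(text):
    """Parse exposition-format text into (name, labels, value) tuples."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        match = SAMPLE_RE.match(line)
        if match is None:
            continue  # skip lines this simplified parser does not understand
        name, raw_labels, value = match.groups()
        labels = dict(LABEL_RE.findall(raw_labels or ""))
        samples.append((name, labels, float(value)))
    return samples


# Hypothetical scrape output; metric and label names are assumptions.
SAMPLE_SCRAPE = """\
# TYPE kafka_consumergroup_lag gauge
kafka_consumergroup_lag{consumergroup="billing",partition="0",topic="orders"} 42
kafka_consumergroup_lag{consumergroup="billing",partition="1",topic="orders"} 7
"""

parsed = parse_metrics(SAMPLE_SCRAPE)
for name, labels, value in parsed:
    print(name, labels["partition"], value)
```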

Install and configure Prometheus to collect Kafka metrics

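Add the following job to the scrape_configs section of your prometheus.yml file.

    - job_name: 'kafka'
      scrape_interval: 10s
      metrics_path: /metrics
      static_configs:
        - targets: ['kafka-broker-1:<metrics_port>', 'kafka-broker-2:<metrics_port>', 'kafka-broker-3:<metrics_port>']

This configuration sets up a job named "kafka" that scrapes metrics from three Kafka brokers every 10 seconds. Each target must be an HTTP endpoint that serves metrics in the Prometheus format, such as the port of a JMX exporter agent running on the broker. Replace <metrics_port> with that port. The broker's client listener port (9092 by default) speaks the Kafka protocol and does not serve metrics.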
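Once the job is in place, you can check whether each broker target is being scraped by running the query up{job="kafka"} against the Prometheus HTTP API. The sketch below builds the query URL and interprets a response body. The Prometheus address, the instance labels, and port 7071 are hypothetical, and the response shown is a hand-written sample rather than real output.

```python
import json
from urllib.parse import urlencode


def build_query_url(base_url, promql):
    """Build an instant-query URL for the Prometheus HTTP API."""
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


def down_targets(response_body):
    """Return instance labels whose `up` sample is 0 in an instant-query response."""
    payload = json.loads(response_body)
    if payload.get("status") != "success":
        raise ValueError("Prometheus query did not succeed")
    return [
        item["metric"].get("instance", "unknown")
        for item in payload["data"]["result"]
        if float(item["value"][1]) == 0.0
    ]


url = build_query_url("http://localhost:9090", 'up{job="kafka"}')

# Hand-written sample response; instance names and port are hypothetical.
sample_response = json.dumps({
    "status": "success",
    "data": {
        "resultType": "vector",
        "result": [
            {"metric": {"instance": "kafka-broker-1:7071"}, "value": [1700000000, "1"]},
            {"metric": {"instance": "kafka-broker-2:7071"}, "value": [1700000000, "0"]},
        ],
    },
})

down = down_targets(sample_response)
print(url)
print(down)
```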

Set up alerts using AlertManager

You can install and configure AlertManager to send alerts from Prometheus. For example, add the following configuration to your alertmanager.yml file:

    global:
      resolve_timeout: 5m

    route:
      group_by: ['alertname']
      group_wait: 10s
      group_interval: 5m
      repeat_interval: 3h
      receiver: 'email'

    receivers:
      - name: 'email'
        email_configs:
          # Per-receiver SMTP settings (the smtp_-prefixed names belong under global)
          - to: 'your.email@example.com'
            from: 'alertmanager@example.com'
            smarthost: 'smtp.example.com:587'
            auth_username: 'your.username@example.com'
            auth_password: 'yourpassword'
            require_tls: true

This configuration sets up an email receiver that sends notifications to your.email@example.com.
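Once AlertManager is running, one way to confirm that the email receiver works is to post a test alert to its v2 API. The sketch below only builds the request body. The alert name, labels, and summary text are made up for illustration, and the address in the final comment assumes AlertManager's default port on localhost.

```python
import json
from datetime import datetime, timezone


def build_test_alert(alertname, severity, summary, now=None):
    """Build the JSON-serializable body for a single test alert."""
    now = now or datetime.now(timezone.utc)
    return [{
        "labels": {"alertname": alertname, "severity": severity},
        "annotations": {"summary": summary},
        "startsAt": now.isoformat(),  # RFC 3339 timestamp with UTC offset
    }]


fixed_time = datetime(2024, 1, 1, tzinfo=timezone.utc)
alerts = build_test_alert("TestAlert", "warning", "Email routing test", now=fixed_time)
body = json.dumps(alerts).encode("utf-8")
print(body.decode("utf-8"))
# To send it, POST `body` with Content-Type application/json to
# http://localhost:9093/api/v2/alerts (address is an assumption).
```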

Create alert rules in Prometheus for Kafka

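You can create alert rules in Prometheus to trigger alerts based on Kafka metrics. For Prometheus to deliver firing alerts, prometheus.yml also needs an alerting section that lists your AlertManager address. To load the rules, add the following rule configuration to your prometheus.yml file:

    rule_files:
      - '/path/to/rules.yml'

Then create a rules.yml file with the following rule: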

    groups:
      - name: kafka-alerts
        rules:
          - alert: HighCPUUsage
            # CPU seconds consumed per second, averaged over 5 minutes (1.0 = one full core)
            expr: sum by (instance) (rate(process_cpu_seconds_total{job="kafka"}[5m])) > 0.8
            for: 1m
            labels:
              severity: warning
            annotations:
              summary: "High CPU usage on Kafka broker {{ $labels.instance }}"
              description: "CPU usage on Kafka broker {{ $labels.instance }} is currently {{ $value }}"

This configuration sets up a rule that triggers an alert if a Kafka broker process uses more than 0.8 CPU cores (80% of one core) for at least one minute.
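To make the expression more concrete, the sketch below approximates what rate() computes for a counter: the average per-second increase over the window. It is a simplification, since Prometheus also handles counter resets and extrapolates to the window boundaries, and the sample readings are invented.

```python
def counter_rate(samples):
    """Average per-second increase between the first and last sample.

    `samples` is a list of (unix_timestamp, counter_value) pairs in time order.
    """
    (t0, v0), (t1, v1) = samples[0], samples[-1]
    if t1 <= t0:
        raise ValueError("need at least two samples in increasing time order")
    return (v1 - v0) / (t1 - t0)


# Hypothetical process_cpu_seconds_total readings over a 5-minute window.
cpu_samples = [(0, 100.0), (150, 220.0), (300, 370.0)]

rate = counter_rate(cpu_samples)  # 270 CPU seconds over 300 seconds
would_fire = rate > 0.8           # same threshold as the rule
print(rate, would_fire)
```

In Prometheus, the alert would fire only after the condition has held for the rule's full for duration.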

Start Prometheus and AlertManager

Start Prometheus and AlertManager using the following commands:

    $ prometheus --config.file=prometheus.yml
    $ alertmanager --config.file=alertmanager.yml

Kafka monitoring with Grafana

Grafana is another popular third-party tool that you can use to monitor your Kafka metrics. Some reasons why Grafana may be a good choice for Kafka monitoring include:

- User-friendly interface

- Integration with other systems

- Large community and ecosystem

- Comprehensive data visualization

Grafana gives you detailed graphical reports and also lets you set alerts and notifications. By following the steps below, you can set up Grafana for Kafka monitoring and visualize Kafka metrics in real time.

Install and configure Grafana

You need to install Grafana to visualize Kafka metrics. Download Grafana, install it, then configure it to connect to Prometheus. You can do this by adding Prometheus as a data source in Grafana.
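Besides the Grafana UI, a data source can be registered through Grafana's HTTP API. The sketch below builds such a request without sending it. The Grafana and Prometheus addresses, the token placeholder, and the build_datasource_request helper are assumptions for illustration.

```python
import json
import urllib.request


def build_datasource_request(grafana_url, api_token, prometheus_url):
    """Build (but do not send) a request that registers Prometheus in Grafana."""
    payload = {
        "name": "Prometheus",
        "type": "prometheus",
        "url": prometheus_url,
        "access": "proxy",  # Grafana's backend queries Prometheus on the user's behalf
        "isDefault": True,
    }
    return urllib.request.Request(
        f"{grafana_url.rstrip('/')}/api/datasources",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_token}",
        },
        method="POST",
    )


# Hypothetical local addresses and a placeholder token.
request = build_datasource_request(
    "http://localhost:3000", "<grafana_api_token>", "http://localhost:9090"
)
print(request.get_method(), request.full_url)
# To actually create the data source: urllib.request.urlopen(request)
```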

Create a dashboard in Grafana

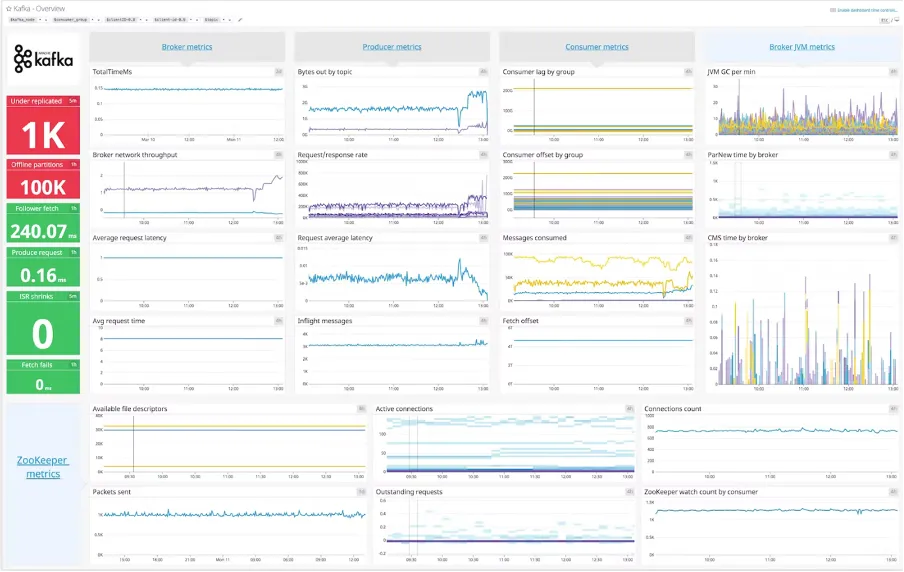

Once you have configured Grafana to connect to Prometheus, you can create a Kafka dashboard to monitor Kafka logs. You can create a new dashboard and add panels for the Kafka metrics you want to monitor, such as broker, topic, and consumer group metrics. The dashboard that connects Kafka and Grafana typically features a design similar to the one shown below.

Configure alerts

You can configure alerts in Grafana to notify you when Kafka metrics exceed certain thresholds. You can create alert rules based on the Kafka metrics you are monitoring.

Best practices for Kafka monitoring

Monitoring Kafka clusters is a continuous process, and it is critical to review the monitored metrics regularly to identify potential issues and optimize performance. Here are some best practices for Kafka monitoring:

Define metrics to monitor

Define a set of key metrics that need to be monitored, such as message throughput, consumer lag, and broker resource utilization. These metrics should align with the SLOs and SLAs of your Kafka cluster. Set up alerts for critical metrics to detect issues before they become severe. Alerts can be sent via email, SMS, or a messaging platform like Slack.

Monitor Kafka logs

Kafka logs contain vital information about the Kafka cluster's health and condition. You can collect log data from Kafka brokers and topics to monitor broker health, topic and partition status, message throughput, latency, and errors. Logs help you identify and resolve problems such as delayed message processing, high message drop rates, and broker failures.

Kafka also offers a Log4j appender for sending log data from Kafka clients to a Kafka topic. You can use it to track client activity and detect errors. For log monitoring, you can use tools like the ELK stack (Elasticsearch, Logstash, and Kibana), Confluent Control Center, or Datadog.
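Before adopting a full log pipeline, a small script can give a first view of broker log health. The sketch below counts log lines by level, assuming the lines follow a "[timestamp] LEVEL message" layout. The sample lines are invented, and your Log4j pattern may differ.

```python
import re
from collections import Counter

LINE_RE = re.compile(r"^\[(?P<timestamp>[^\]]+)\]\s+(?P<level>[A-Z]+)\s+(?P<message>.*)$")


def count_levels(log_text):
    """Count log lines per level; lines that do not match (such as stack
    trace continuations) are skipped."""
    counts = Counter()
    for line in log_text.splitlines():
        match = LINE_RE.match(line)
        if match:
            counts[match.group("level")] += 1
    return counts


# Invented sample lines in the assumed layout.
SAMPLE_LOG = """\
[2024-01-01 12:00:00,001] INFO Broker started (example.Logger)
[2024-01-01 12:00:05,120] WARN Slow request detected (example.Logger)
[2024-01-01 12:00:09,450] ERROR Failed to write to disk (example.Logger)
    at example.Writer.write(Writer.java:42)
[2024-01-01 12:00:10,000] INFO Recovered (example.Logger)
"""

level_counts = count_levels(SAMPLE_LOG)
print(dict(level_counts))
```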

Review metrics regularly

Review the monitored metrics regularly to ensure that the Kafka cluster is meeting SLOs and SLAs. For example, you can create custom dashboards to visually monitor the Kafka cluster's performance. Dashboards can assist operators in quickly identifying and troubleshooting issues, and help them make informed decisions based on the reviewed data to improve the Kafka cluster's performance.

Perform regular capacity planning

Capacity planning ensures the Kafka cluster has enough resources to run optimally. You should perform capacity planning regularly to ensure that the Kafka cluster can handle changes in message volume and other performance requirements.
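A back-of-the-envelope storage estimate is a common starting point for capacity planning. The sketch below multiplies write throughput by retention time and replication factor, then adds headroom. All input figures and the 30% headroom are illustrative assumptions, and the estimate ignores compression and index overhead.

```python
def required_storage_gb(msgs_per_sec, avg_msg_bytes, retention_hours,
                        replication_factor, headroom=0.3):
    """Estimate cluster-wide disk needs in GB (10^9 bytes)."""
    bytes_per_sec = msgs_per_sec * avg_msg_bytes
    retained_bytes = bytes_per_sec * retention_hours * 3600 * replication_factor
    return retained_bytes * (1 + headroom) / 1e9


# Hypothetical workload: 10,000 msgs/s of 1 KB each, 7 days retention, 3 replicas.
estimate = required_storage_gb(10_000, 1_000, 168, 3)
print(f"{estimate:.1f} GB")
```

Re-running the estimate with projected throughput shows how much growth the current disks can absorb.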

Conclusion

Monitoring Kafka clusters helps you ensure optimal performance, availability, and reliability. Operators and developers can detect issues early, troubleshoot them, and optimize Kafka cluster performance. Monitoring Kafka clusters can be done in various ways, including using Kafka's built-in metrics, third-party monitoring solutions, custom scripts, dashboards, log monitoring, and alerting.

It is critical to define a set of key metrics to monitor, set up alerts, regularly review metrics, and perform capacity planning to ensure effective Kafka monitoring.
