Kafka console consumer: Tutorial & examples

Kafka console consumer

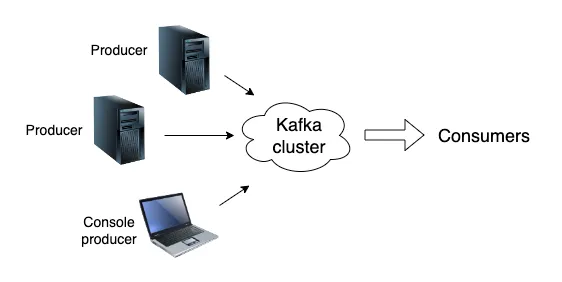

Kafka makes it easy to connect data sources with applications that want to use that data. Instead of creating custom integrations between producers and consumers of data, both integrate with Kafka. This way, you don’t need to fret over exactly what kind of application is on the other side of Kafka.

In addition to connecting applications to Kafka via code, you can also use the Kafka CLI (command line interface) to produce or consume data. Setting up a consumer via the Kafka console is straightforward. This article will show you how to use the Kafka console consumer in realistic situations using the kafka-console-consumer command-line tool. Additionally, we’ll share some tips and best practices for getting the most out of the console consumer.

Note: you can also find less detailed examples a Kafka consumer on the console from the official Kafka docs.

Summary of use cases

Kafaka Console Consumer is beneficial in the following scenarios.

Understanding the Kafka console consumer

A consumer in Kafka receives data sent to a topic. Consumers are typically software applications, but Kafka allows you to manually create a consumer on the command line using the Kafka console consumer.

This manual terminal access is useful for various reasons, such as testing, debugging and more. Let’s explore these use cases before looking through some broad recommendations for using the console consumer well.

[CTA_MODULE]

Use cases

The best way to get to know the Kafka console consumer is to see it in use. Ideally, you’ll want to see it used in a realistic context. So let’s look through the situations where the console consumer shines.

To make it easier for you to follow, here are some instructions for setting up Kafka on your machine. We’ll use instructions for macOS, but you can find equivalent install instructions for Ubuntu in the lead article for this series: Kafka Tutorial.

Let’s start by installing Kafka.

$ brew install kafka

Installing Kafka 3.3.1_1

...For Kafka to work, it needs a consensus mechanism for the cluster. We will skip a full explanation of consensus mechanisms for the scope of this article.

Currently, Kafka uses ZooKeeper (although this is changing, see: Kafka without ZooKeeper). If you’re using Redpanda, you don’t need to worry about this step because Redpanda doesn’t rely on ZooKeeper.

Before we start Kafka, we’ll run ZooKeeper.

$ zookeeper-server-start /opt/homebrew/etc/kafka/zookeeper.properties

[2022-12-02 15:49:56] INFO Reading config from: zookeeper.properties

[2022-12-02 15:49:56,946] INFO clientPortAddress is 0.0.0.0:2181

...Now we’re finally ready to start up Kafka.

$ kafka-server-start /opt/homebrew/etc/kafka/server.properties

[2022-12-02 15:50:59,005] INFO Registered kafka:type=kafka.Log4jController MBean (kafka.utils.Log4jControllerRegistration$)

...To consume data, we will need to produce data. In turn, to produce data, we’d need a topic to produce on. So let’s create a topic.

$ kafka-topics \

--bootstrap-server localhost:9092 \

--topic example-topic \

--create

Created topic example-topic.Great, now that we’ve got Kafka running and a topic ready to produce to and consume from, we can get started showing you some more realistic use cases.

Testing

After you set up Kafka, you want to try it out and see if the cluster is working as expected. To start, let’s produce some example data on our topic.

$ kafka-console-producer \

--topic example-topic \

--bootstrap-server localhost:9092

>Let’s see if we can consume this!

>^CNow we can finally see the Kafka console consumer in action by trying to consume the data we just produced.

$ kafka-console-consumer \

--bootstrap-server localhost:9092 \

--topic example-topic

Let’s see if we can consume this!

Processed a total of 1 messagesThere we go! We’ve seen the console consumer in action, but only in a trivial use case. Let’s move on to more interesting examples.

Shell scripting

If you’re programming in a shell scripting language, the CLI is the easiest way to interact with Kafka via your code. To demonstrate this, imagine you are working on a PowerShell script snippet that ensures the system is not under a distributed denial of service (DDoS) attack.

The script checks if there are over 500 current connections. If so, it uses the console consumer to display messages on the ddos-monitor topic.

$Conns = (Get-NetTCPConnection).count

if ($Conns -gt 500) {

kafka-console-consumer \

--bootstrap-server localhost:9092 \

--topic ddos-monitor

}If not for the console producer, this would require an extra step. We’d need a separate script in a language with a Kafka SDK. Then we could have PowerShell call that script.

Debugging

The Kafka console consumer gives us a candid look at data coming into a topic, which makes it a fantastic tool for debugging. For example, imagine we were to write the following simple Kafka producer in Python to send the last line of the Apache log file.

from kafka import KafkaProducer

from datetime import datetime

producer = KafkaProducer(bootstrap_servers='localhost:9092')

apache_log_file = open('/var/log/httpd.log')

with apache_log_file.readlines[1] as apache_log_last:

producer.send('example-topic', apache_log_last.encode())

now = datetime.now()

date_string = now.strftime('%m/%d/%Y')

print(f'Log tail produced successfully ({date_string})')The code above has a bug. Let’s run the code and open the Kafka console consumer to see what might happen.

$ python send_log_end.py

Log tail produced successfully (01/12/2022)

$ kafka-console-consumer \

--bootstrap-server localhost:9092 \

--topic example-topic

GET /store?id=12 201 March 3rd, 1996

Processed a total of 1 messagesWe can see the log entry that our Python script sent to Kafka.

Then we can check where in the log this line appears.

$ grep -n "GET /store?id=12 201 March 3rd, 1996" /var/log/httpd.log

2According to the grep command, this is not the last line of the file, but the second line! So what’s going wrong? We’re using the index 1, which gives us the second line, rather than -1, which would provide us with the last!

Of course, there are infinite ways in which looking directly at the data arriving for consumers of a topic can help you with debugging.

[CTA_MODULE]

Recommendations

Merely understanding how the Kafka console consumer works are only the beginning. The wisdom required to use these tools properly comes from hard-earned experience. So let’s look at some tips for using the console consumer like a pro.

Kafka console consumers in production

Engineers should treat production systems with extreme care. When making a change to production, you want to follow formal processes that include essential steps like automated testing and code review.

For tools like the Kafka console producer, tinkering with production via the CLI should be avoided in almost all cases. The console consumer, however, is a different story. Because the Kafka console consumer doesn’t alter the data in production, it’s a relatively harmless tool to use in production as long as you consume a consumer group not used by other consumers.

Navigating the docs

When you’re trying to learn the Kafka console consumer or any part of the Kafka console ecosystem, you will need to contend with the documentation. Where should you go for official guidance on using these tools correctly? The Kafka console’s official documentation comes primarily from two sources:

- The Apache Foundation’s official docs: https://kafka.apache.org/quickstart

- The command line help options.

You can find the latter option by running a Kafka CLI command with the --help option, like so:

$ kafka-console-consumer

This tool helps to read data from Kafka topics and outputs it to standard output.

Option Description

------ -----------

--bootstrap-server REQUIRED: The server(s) to connect to.

--consumer-property A mechanism to pass user-defined

properties in the form key=value to

the consumer.

--consumer.config Consumer config properties file. Note

that [consumer-property] takes

precedence over this config.

[...]This is a great resource when you need to look up an option you think you might be using incorrectly or want tips on correctly using a given command line option. For example, you can set security credentials for services like Kerberos by passing a custom configuration file with the --consumer.config option.

Alternative solutions

Kafka was revolutionary for its time, but in recent years other software has arisen that builds on the foundations initially laid down by Kafka. For example, Redpanda Keeper (Redpanda’s CLI) offers substantial improvements over the traditional Kafka console commands:

- Spin up a local cluster with a single command (see here)

- Go-based plugin capability (example)

- Kernel tuner and disk performance evaluator

- Easy to manage cluster health and status.

The event streaming world has extremely high requirements for the systems engineers choose to rely on, and astute admins may want to look at newer solutions that are solving new challenges in the space.

[CTA_MODULE]

Conclusion

Kafka makes it quick and easy to manually launch a consumer via the terminal. Though this feature is dangerous and ill-advised in production, it’s an important tool for resolving edge cases that Kafka engineers will inevitably encounter. We wrote this article to serve as a reference for engineers like you who find themselves in just such a situation.